In Isolation: The Story of Containerization Origins and Evolution

Everything has a story. Sometimes it is full of unexpected twists and turns, like the plot of a detective novel; sometimes it is quite simple and straightforward. The two most popular container technologies, Docker and Kubernetes, also have a history, and today's article is about how these famous technologies emerged and evolved.

Both trace their roots back to virtual machines (VMs), which date back to the 1960s. The first experiments in this area were carried out by IBM at Yorktown Heights, where researchers developed software emulators for IBM 7040 computers that made it possible to run multiple instances of an application on a single machine. These emulators were intended to make it easier to develop hardware-independent programs and to port code to machines with other architectures. Virtualization also supported the development of high-level programming languages: it was used to create execution environments for user applications, to replicate operating environments and to emulate processor instruction sets.

If the emergence of VMs was the starting point for containerization systems, the turning point in their history was certainly 1979, when Bell Laboratories (part of AT&T) released Version 7 Unix. Among other innovations, this version introduced the chroot system call, which changes the root directory of a process and its children. This laid the foundation for process isolation by giving each process its own view of the file system. A process running in the system was given its own memory allocation and file descriptors. Additionally, inter-process communication (IPC) technology was introduced. In 1982, chroot was added to BSD, which allowed similar isolation mechanisms to be used in derived systems. Nevertheless, Unix still had a single process space, network stack and IPC for all users, so nothing prevented one application from monopolizing system resources and disrupting the work of other OS users. In other words, effective kernel control over the resources used by processes was still an open problem.
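To make the effect of chroot concrete: after a process calls chroot on a directory such as `/srv/jail`, every absolute path it opens is resolved relative to that directory, and `..` at the new root stays at the root. The real call is `chroot(2)` (exposed in Python as `os.chroot`, which requires root privileges); the minimal sketch below only models the path remapping lexically. The function name and the `/srv/jail` directory are hypothetical, and symlinks, mount points and the working directory are ignored.

```python
import posixpath


def host_path_after_chroot(new_root: str, path_in_jail: str) -> str:
    """Return the host path that an absolute path inside a chroot refers to.

    Hypothetical helper: a purely lexical model that ignores symlinks,
    mount points and the process's working directory.
    """
    # Normalize the path as the jailed process sees it. Leading ".."
    # components collapse at "/", just as ".." at the process root stays put.
    normalized = posixpath.normpath("/" + path_in_jail.lstrip("/"))
    relative = normalized.lstrip("/")
    # Graft the normalized path onto the directory passed to chroot().
    return posixpath.join(new_root, relative) if relative else new_root


print(host_path_after_chroot("/srv/jail", "/etc/passwd"))        # /srv/jail/etc/passwd
print(host_path_after_chroot("/srv/jail", "/../../etc/shadow"))  # /srv/jail/etc/shadow
```

The second call shows why chroot was useful for isolation: a process cannot climb above its new root simply by adding `..` components to a path.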

FreeBSD Jail

The next step on the road to modern containers was taken in 2000 by R&D Associates, a small private company offering shared hosting services. The provider was trying to solve an important technical problem: separating its own resources on the server from its clients' projects, so that they could work independently without interfering with each other. R&D Associates' servers ran FreeBSD, so the Jail technology they created worked on that OS.

Jail was a virtualization system that allowed you to run multiple instances of FreeBSD inside FreeBSD; the instances shared the same kernel but had their own independent environments and sets of applications. Accordingly, each isolated system had its own settings, so such a "virtual server" could be configured to meet the needs of its user. A process inside a jail runs in a kind of "sandbox", while the isolated system itself has its own set of files, processes, and user and superuser accounts. From the inside, it is virtually indistinguishable from a real operating system.

The technology first appeared in FreeBSD 4.0, released on March 14, 2000, which introduced the jail utility and system call. Before FreeBSD 7.2, each isolated OS instance required a dedicated IP address; that version added support for multiple IPv4 and IPv6 addresses per jail and the ability to bind a "container" to specific processors on multiprocessor servers.

Linux-VServer

For a year, FreeBSD Jail was considered the most advanced *nix virtualization system, until its Linux counterpart, Linux-VServer, appeared in 2001. Like Jail, VServer allowed OS resources to be shared by adding virtualization features to the Linux kernel. Programmers Jacques Gélinas and Herbert Pötzl are considered the creators of VServer, and the product itself was distributed free of charge as open-source software under the GNU GPL.

In Linux-VServer terminology, each isolated copy of the system was called a "security context", and the system running in it was a VPS (virtual private server). A VPS was started by a chroot-like utility. In fact, starting a VPS was just a matter of initializing an operating system in a new security context, and stopping it was just a matter of destroying all the processes bound to that context.

Linux-VServer quickly gained popularity among shared hosting providers, who used it to create VPSs based on various Linux distributions, first and foremost Debian.

VPSs built with Linux-VServer could use a common file system and common file sets (via hard links), which saved disk space. Processes in a VPS ran as normal processes on the host system. Using Linux-VServer required patching the Linux kernel, and the tool lacked clustering and process migration capabilities, so the host kernel was a single point of failure for all VPSs. In addition, some system calls and parts of the /proc and /sys file systems remained unvirtualized. Together, these can be considered drawbacks of Linux-VServer compared with modern containerization systems, which provide a much higher level of isolation.
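Sharing files through hard links saves space because a hard link is just another directory entry for the same inode, so several VPS trees can reference one copy of a file's data. The sketch below demonstrates this with the Python standard library in a temporary directory; the directory and file names are made up for illustration, and any protection against one VPS modifying the shared copy (which, being a single inode, every linked VPS would see) is not modeled.

```python
import os
import tempfile
from pathlib import Path


def share_via_hard_links(template: Path, vps_roots: list[Path], rel: str) -> list[Path]:
    """Link one template file into several VPS trees without copying its data."""
    links = []
    for root in vps_roots:
        target = root / rel
        target.parent.mkdir(parents=True, exist_ok=True)
        os.link(template, target)  # new directory entry pointing at the same inode
        links.append(target)
    return links


with tempfile.TemporaryDirectory() as tmp:
    base = Path(tmp)
    template = base / "template" / "bin" / "tool"
    template.parent.mkdir(parents=True)
    template.write_bytes(b"placeholder binary")
    links = share_via_hard_links(template, [base / "vps1", base / "vps2"], "bin/tool")
    # All three paths resolve to one inode, so the data is stored only once.
    inodes = {os.stat(p).st_ino for p in [template, *links]}
    nlink = os.stat(template).st_nlink
    print(len(inodes), nlink)  # 1 3
```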

In 2005, another virtualization technology for Linux, OpenVZ (Open Virtuozzo), appeared. It also used a patched Linux kernel and allowed multiple OS instances sharing a single kernel to run in so-called "virtual environments" (VEs). Despite some popularity, OpenVZ never became part of the mainline Linux kernel.

First containers

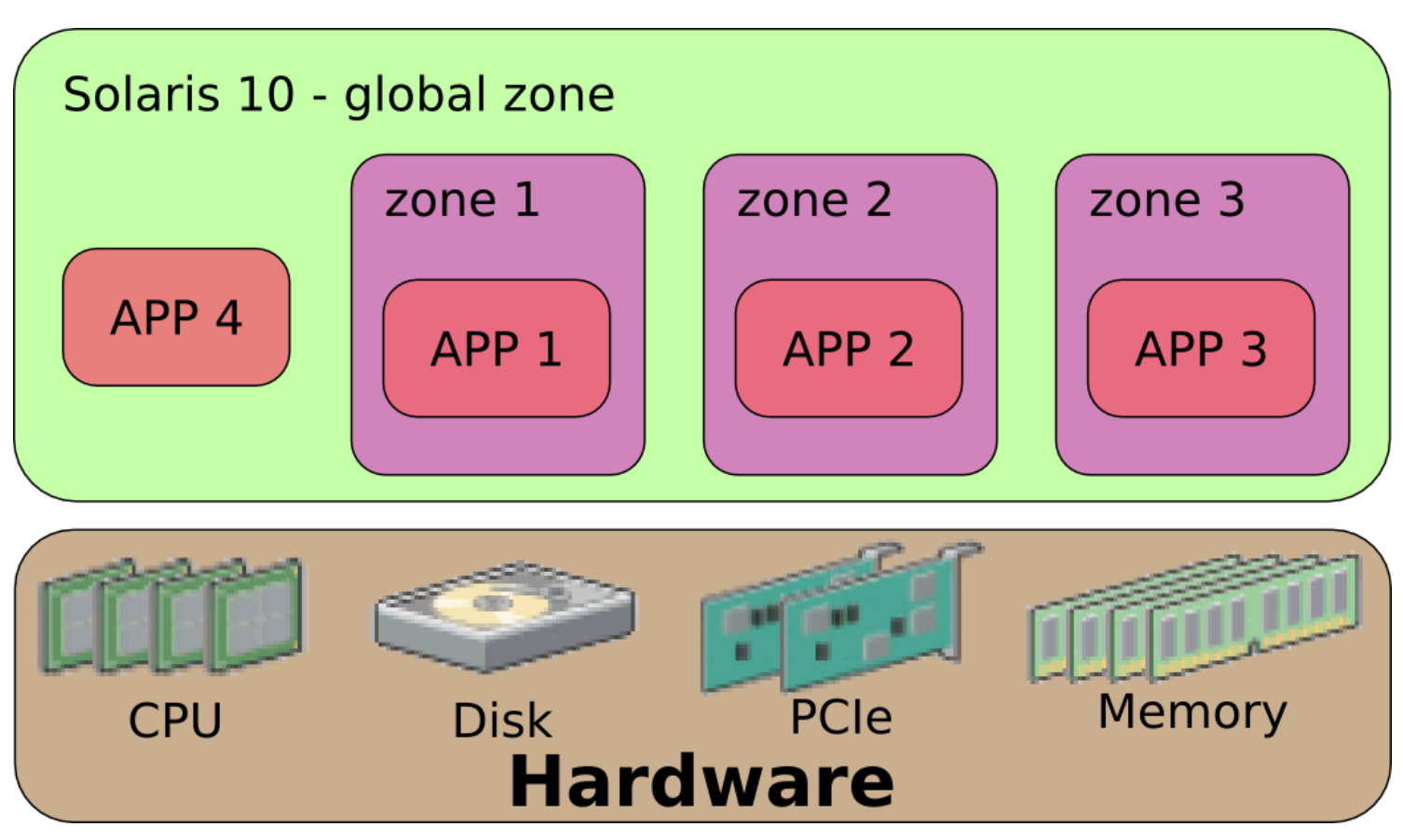

The first containers to be officially called by this term appeared in February 2004 in Sun Microsystems' Solaris 10 OS and ran on x86 and SPARC servers. Unlike the virtualization systems described above, Solaris Containers included not only isolated "sandboxes" for running the OS (called "zones" in Sun's terminology) but also system resource management tools that made it possible to create "snapshots" of individual zones and clone them. In other words, they included orchestration mechanisms.

Zones were completely isolated virtual servers within the host OS. Each such OS instance had its own network name, dedicated network interfaces, file system, set of users (including root) and configuration. A zone did not require fixed memory or CPU allocations: hardware resources were shared, but if necessary the administrator could reserve part of the server's capacity for a specific zone. Processes inside containers ran in isolation, had no access to each other and therefore could not conflict.

The main difference between Solaris Containers and their predecessors was that, although virtual OSs still used the host system kernel by default, the administrator could also run copies of the system in containers with their own kernel if desired. This was the next important step in the evolution of containerization technology.

Containers from Google

In 2006, Google developed its own resource-sharing system called Process Containers. It was later renamed control groups, or cgroups for short, and incorporated into the Linux kernel in version 2.6.24.

Technically, a cgroup is a group of processes whose use of various resources, such as memory, CPU, I/O and network, is limited at the operating system level. Such groups can be organized into hierarchies and managed as a whole. Cgroups provide not only resource limiting but also prioritization and strict accounting. Special control mechanisms are available as well: a selected group can be temporarily "frozen", which checkpoint/restore tools rely on to take a "snapshot" of a container and later restore its state from that checkpoint. Two years later, the LXC system was built on top of this technology.

LXC and Warden

Linux Containers (LXC) is a complete container system for Linux that provides OS-level virtualization. It was developed in 2008 by programmers Daniel Lezcano, Stéphane Graber and Serge Hallyn. LXC relied on cgroups and on namespace isolation, supported by the Linux kernel since version 2.6.24. LXC lets containers run at the OS level with their own process and network space, without having to create a full VM.

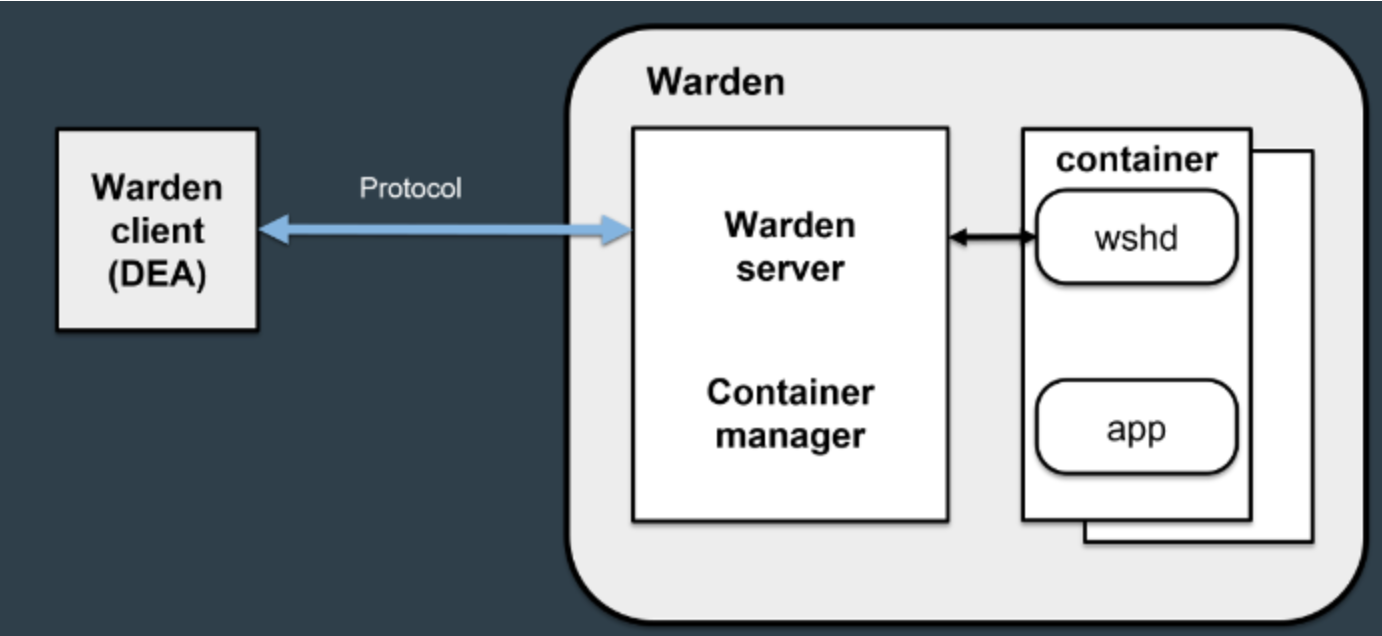

Continuity is one of the hallmarks of IT development, and of open-source products in particular. LXC became the basis for several projects implementing isolated containers not only in Linux but also in other operating systems. One of them was Warden, released in 2011 by the Cloud Foundry community. Warden can isolate environments on any OS; it runs as a daemon and provides an API for managing containers. Cloud Foundry built a client-server model for orchestrating a set of containers across multiple hosts, and Warden was part of it, providing users with a service for managing cgroups, namespaces and process lifecycles in isolated environments. The Warden developers later replaced LXC with their own virtualization system.

The second project built on LXC (which later also abandoned it in favor of its own implementation) was Docker, launched in 2013.

Docker

Work on Docker began in 2011 at the startup incubator Y Combinator, and its creators were programmers Kamel Founadi, Solomon Hykes and Sebastien Pahl. Unlike its predecessors, this containerization system was originally conceived as a commercial service for cloud infrastructures, offered to customers on the SaaS model.

The first commercial company to use Docker for its internal projects was the French cloud provider dotCloud, and the product was presented to the general public at the PyCon conference in Santa Clara in March 2013. On September 19 of the same year, Docker announced a partnership with Red Hat to integrate the product into Fedora, Red Hat Enterprise Linux and OpenShift. A year later, Docker version 0.9 replaced LXC with its own library, libcontainer, written in the Go programming language.

Once Docker appeared on the market and many cloud providers began offering containers to their customers as a service, the technology's popularity grew rapidly. In fact, Docker gave rise to an entire ecosystem of container management tools, and one of its most prominent members is Kubernetes.

Kubernetes

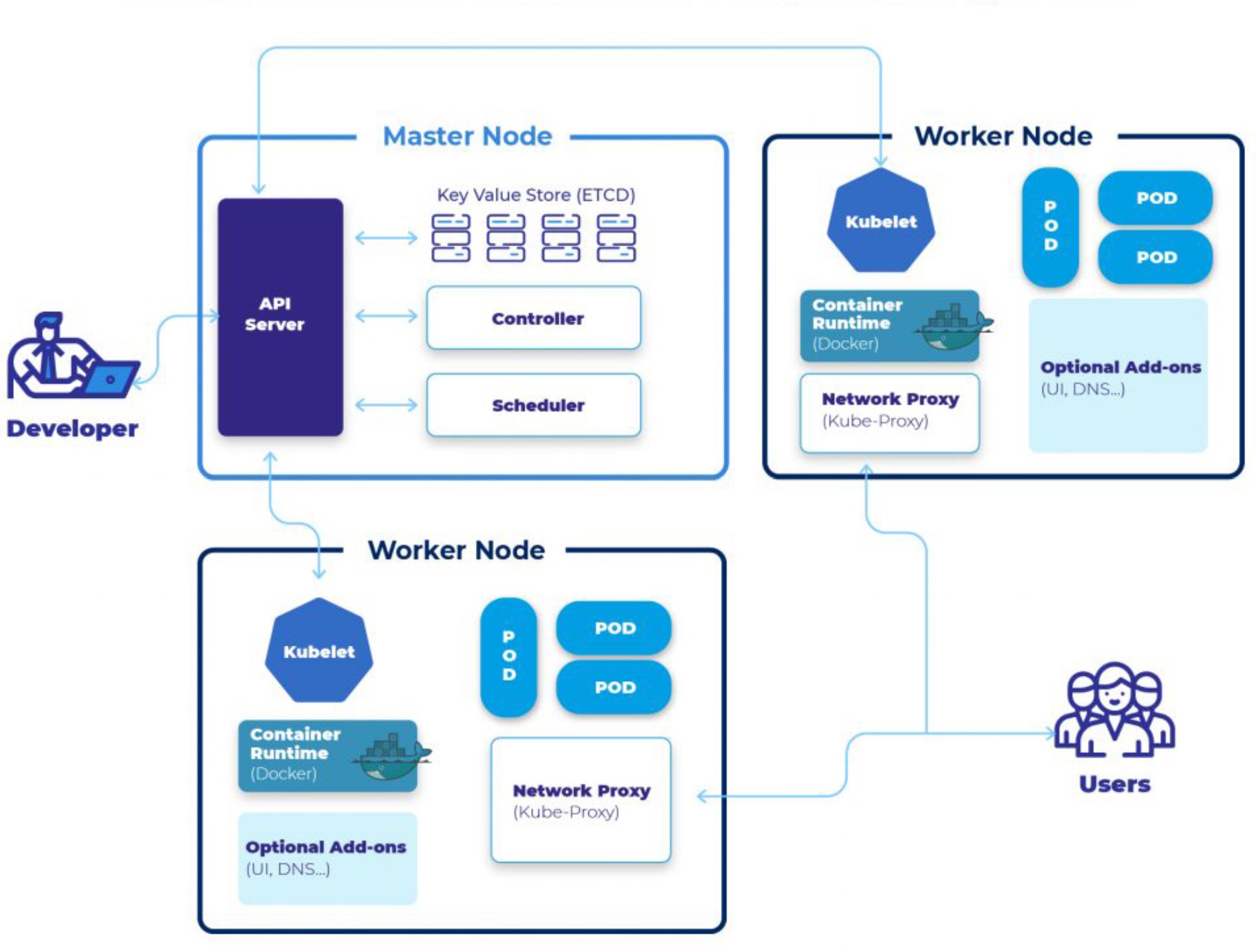

Launched in June 2014, Kubernetes quickly became the most popular system for automating the deployment, scaling and management of production-grade containerized applications. This open-source software lets you manage entire clusters of containers as a single entity.

The product originated inside Google for internal needs: the corporation needed a reliable system to manage the server clusters running its search engine and other heavily loaded services. Kubernetes grew out of Google's internal cluster manager Borg, named after the cybernetic collective from the science-fiction series Star Trek, and the new project was code-named Project Seven, after Seven of Nine, a former Borg character from Star Trek: Voyager. Developers Joe Beda, Brendan Burns, Craig McLuckie, Tim Hockin and Brian Grant chose a ship's wheel with seven spokes as the logo. However, Google did not find this name catchy enough, so the product was renamed Kubernetes, from the ancient Greek word for "helmsman". The numeronym k8s is often used for short, because there are eight letters between the "k" and the "s" in the word "kubernetes".
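The numeronym rule is simple arithmetic: keep the first and last letters and replace everything between them with the number of letters removed. A minimal sketch (the function name is our own):

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as first letter + count of inner letters + last letter."""
    if len(word) <= 3:
        return word  # too short to benefit from abbreviation
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("kubernetes"))            # k8s
print(numeronym("internationalization"))  # i18n
```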

In 2014, Google released the Kubernetes source code, written in Go, as open source under the Apache 2.0 license. A year later, the Cloud Native Computing Foundation (CNCF) was founded together with the Linux Foundation, and the developers transferred the rights to the product to it. Initially, Kubernetes used Docker as its container engine, but in 2016 it introduced the Container Runtime Interface (CRI), a plugin interface that allows other container runtimes to be used in addition to Docker.

The security of containers, together with their high speed of deployment, gave rise to a separate DevOps direction focused on building containerized applications and reducing time to market for new projects. Since the advent of Kubernetes, most major cloud players, including VMware, Microsoft Azure, AWS and others, have announced support for it on top of their own infrastructure. Containerization systems continue to evolve, with new tools for creating and deploying containerized applications and for managing large container clusters in data centers. Kubernetes supports increasingly complex classes of applications, enabling enterprises to migrate to both hybrid cloud and microservices architectures. Containerization has become the foundation of modern software infrastructure, and Kubernetes is used in most large enterprise projects today. As of June 2023, the Kubernetes project is one of the most active open-source communities on GitHub, with over 3,400 members. This indicates that container technology is very popular, and the number of companies using it to provide services to their customers will only grow.