Analytics holds a special place in business: it helps increase turnover, improve the efficiency and speed of service, and grow profit. It is a way of monitoring the state of your affairs through hard figures, whether to forecast future sales or to look back and learn from past mistakes.

This task requires a qualified team of analysts who can use data collection software, build their own data solutions or apply ready-made ones, and work with databases. Most importantly, they must be able to summarise and analyse the data they receive. To compensate for a shortage of such specialists, or to make the existing team more efficient by automating business processes, a dedicated solution is needed.

In this article, we will look at ways to automate the analytics of your customer interactions using artificial intelligence via the GPT API from Serverspace.

The way of working with data

User feedback analysis

Imagine that you have accumulated a body of feedback and messages from customers, whether from email, the company's website or a third-party marketplace. You need to assess customer attitudes towards your product, analyse their needs and solve their problems.

In a classic scenario, you would have to rely only on numerical metrics, such as the rating a user gave to the service or product. However, such analysis is not always useful: it does not give a complete picture of the customer's needs, and the rating itself may not be consistent with what the customer writes.

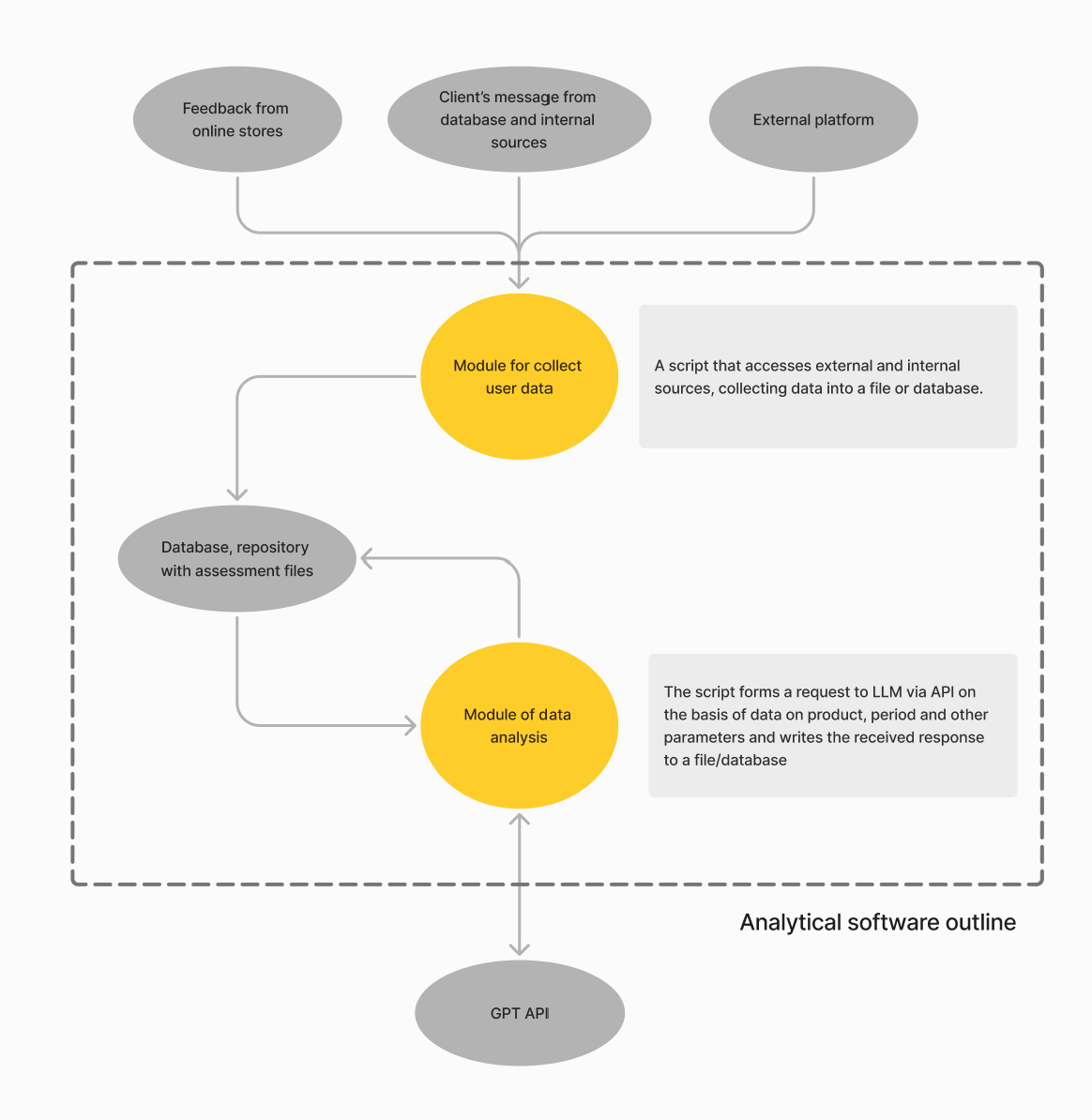

What is needed is full-text analysis that takes into account lexical features such as irony and sarcasm, followed by an evaluation of the customer's attitude towards the product. A customer feedback processing scheme might look like this:

The customer data collection module accesses external sources, requests the data sets needed for analysis, and writes them to a database or a separate file. The analytics module reads the data set from that storage and sends it for analysis to the GPT API from Serverspace. After some time the analytics for the data set comes back and is saved to the same database or to a separate file.

Example of the system at work

The input is an array of feedback records, each containing a customer comment, a rating and the seller's response.

{

List of Comsumers Data

{

"Feedback-1": [

{

"comment": "For brace system too much thick thread",

"rate": "4",

"response": "Helena, hello! Thank you for your feedback! Thanks to the innovative patented technology ****, the thread ****** fluffs during use, collecting all the plaque on itself. We also recommend paying attention to our new product — interdental brush with elastic tip ***** with mint flavor, article ******. We hope that you will appreciate the product!"

"comment": "It is convenient to thread through braces, but the thread itself is very fluffy.",

"rate": "3",

"response": "Helena, hello! Thank you for your feedback! Thanks to the innovative patented technology ****, the thread ****** fluffs during use, collecting all the plaque on itself. We also recommend paying attention to our new product — interdental brush with elastic tip ***** with mint flavor, article ******. We hope that you will appreciate the product!"

"comment": "It is convenient to thread through braces, but the thread itself is very fluffy.",

"comment": "It didn't suit me. It's such nonsense.";

"rate": "4",

"response": "-"

}

]

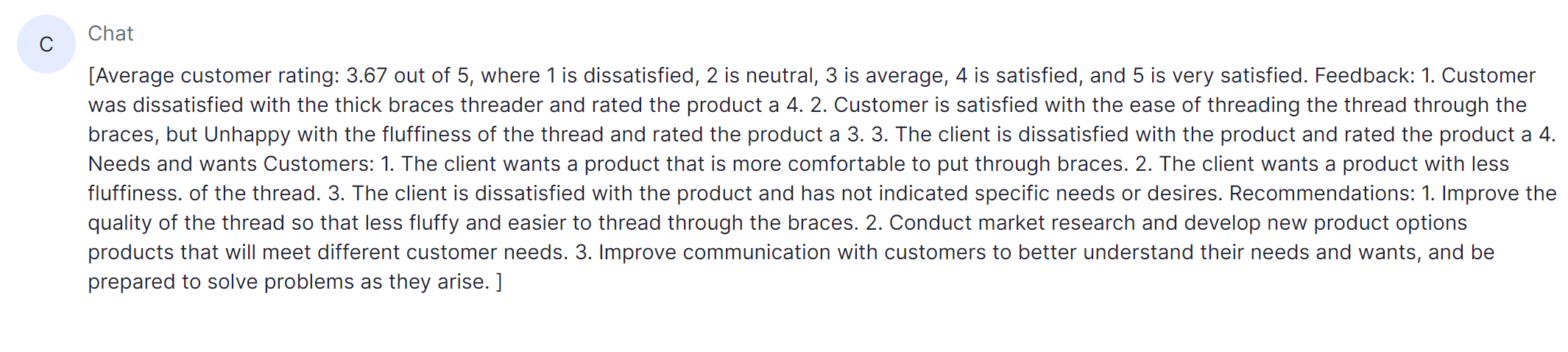

}Analytics from LLM on this set of product comments:

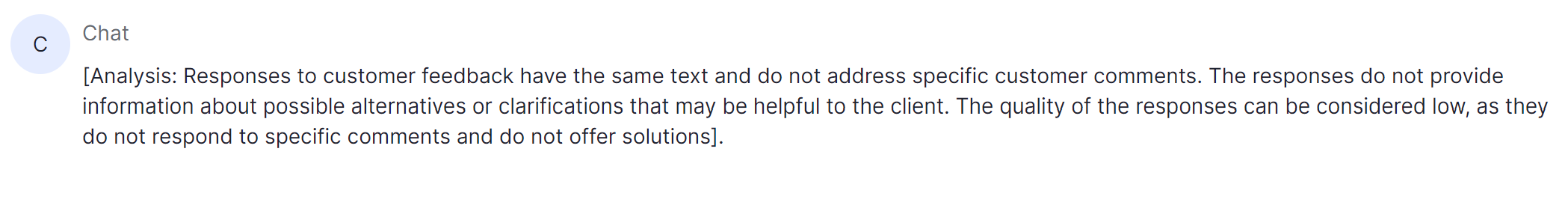

The quality and composition of the analytics depend directly on the query posed to the GPT model, so it is advisable to split the work of the analytics module into separate queries, one per task. This noticeably improves the quality of the answers. The form of the request is arbitrary, but a structured markup format of any kind is recommended.
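The idea of one query per task can be sketched as follows. This is a minimal illustration, not the service's prescribed format: the task names and their wording in `TASKS`, and the helper `build_payloads`, are hypothetical, while the model name and sampling parameters are taken from the analytics module shown later in this article. The feedback is embedded as JSON so that the model receives structured input.

```python
import json

# Hypothetical analysis tasks: each entry becomes its own request.
TASKS = {
    "sentiment": "Classify the overall customer attitude as positive, neutral or negative.",
    "needs": "List the customer needs mentioned in the comments.",
    "problems": "List the product problems mentioned in the comments.",
}


def build_payloads(feedback_items, tasks=TASKS, model="openchat-3.5-0106"):
    """Return one chat-completion request body per analysis task."""
    # Serialise the feedback once; every task receives the same structured data.
    data_block = json.dumps(feedback_items, ensure_ascii=False, indent=2)
    payloads = {}
    for name, instruction in tasks.items():
        content = (
            "Pretend you are a data analyst.\n"
            f"Task: {instruction}\n"
            "Data (JSON):\n"
            f"{data_block}"
        )
        payloads[name] = {
            "model": model,
            "max_tokens": 1024,
            "top_p": 0.1,
            "temperature": 0.6,
            "messages": [{"role": "user", "content": content}],
        }
    return payloads


sample = [{"comment": "It didn't suit me. It's such nonsense.", "rate": "4", "response": "-"}]
payloads = build_payloads(sample)
print(list(payloads))
```

Each value in `payloads` can then be sent as a separate POST request, so the answer to one question does not dilute the answer to another.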

The implementation of a customer data collection module might look like this:

    import json
    import sqlite3

    import pandas as pd
    import requests
    from bs4 import BeautifulSoup

    # Connect to the SQLite3 database
    conn = sqlite3.connect('example.db')
    cursor = conn.cursor()


    # Load data from a database table
    def fetch_data_from_db(table_name):
        # table_name is interpolated into the SQL, so pass only trusted names
        cursor.execute(f"SELECT * FROM {table_name}")
        data = cursor.fetchall()
        columns = [description[0] for description in cursor.description]
        df = pd.DataFrame(data, columns=columns)
        return df


    # Parse data from the company website
    def parse_data_from_website(url):
        page = requests.get(url, timeout=10)
        page.raise_for_status()
        soup = BeautifulSoup(page.text, 'html.parser')

        def text_of(block, class_name):
            # Return the text of a nested div, or an empty string if it is absent
            node = block.find("div", class_=class_name)
            return node.get_text(strip=True) if node else ""

        # Example of parsing comments and other feedback.
        # Note that the selectors must match the real structure of your site.
        feedbacks = soup.find_all("div", class_="feedback__info-header")
        results = []
        for feedback in feedbacks:
            results.append({
                "comment": text_of(feedback, "comment"),
                "rate": text_of(feedback, "rate"),
                "response": text_of(feedback, "response")
            })
        return results


    # Write data to the table in JSON format
    def write_to_new_table(json_data, table_name):
        cursor.execute(f"""
            CREATE TABLE IF NOT EXISTS {table_name} (
                id INTEGER PRIMARY KEY AUTOINCREMENT,
                data JSON
            )
        """)
        cursor.execute(f"INSERT INTO {table_name} (data) VALUES (?)", (json_data,))
        conn.commit()


    # Main function
    def main():
        # Set the URL for parsing and the names of the tables
        url = 'http://example.com'
        existing_table = 'source_table'
        new_table = 'parsed_data'

        # Retrieve data from the database
        # (not merged into the JSON below; extend json_structure if you need it)
        db_data = fetch_data_from_db(existing_table)

        # Parse data from the website
        web_data = parse_data_from_website(url)

        # Structure the data for JSON
        json_structure = {
            "List of Consumers Data": {
                "Feedback-1": web_data
            }
        }

        # Convert the data to a JSON string
        json_data = json.dumps(json_structure, ensure_ascii=False)

        # Write the data to a new table
        write_to_new_table(json_data, new_table)
        print("Data successfully loaded in the table", new_table)


    # Run the script
    if __name__ == "__main__":
        main()

    # Close the connection to the database
    conn.close()

Explanation of the operation of the data collection module:

- Database Connection: The script connects to the database using sqlite3.

- Retrieving data from the database: The fetch_data_from_db function fetches data from an existing table.

- Parsing data from website: In the parse_data_from_website function, we collect comments, ratings and responses from a web page and form them into a structure that conforms to JSON format.

- Creating a JSON structure: The parsing results are placed in a JSON structure in which ‘List of Consumers Data’ is the top-level key containing a nested object with the key ‘Feedback-1’. Its value is an array in which each element is a dictionary with the keys ‘comment’, ‘rate’ and ‘response’.

- Writing data to a table: The resulting structure is converted to a JSON string and written to a new database table.

Be sure to adapt the data parsing in parse_data_from_website to match the actual HTML structure on your site. If you need to add additional data (e.g. from the database), you can extend the JSON structure accordingly.
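Extending the JSON structure with rows from the database could look roughly like this. It is a sketch under assumptions: the helper names `rows_as_dicts` and `build_structure` and the key "Feedback-DB" are hypothetical, the source table is assumed to have the same three columns as the parsed feedback, and an in-memory SQLite database stands in for example.db so the snippet runs on its own.

```python
import json
import sqlite3


def rows_as_dicts(conn, table_name):
    """Read every row of a table as a list of plain dictionaries."""
    # table_name is interpolated into the SQL, so pass only trusted names.
    cur = conn.execute(f'SELECT * FROM "{table_name}"')
    columns = [description[0] for description in cur.description]
    return [dict(zip(columns, row)) for row in cur.fetchall()]


def build_structure(web_data, db_rows):
    """Combine parsed website feedback and database rows in one structure."""
    return {
        "List of Consumers Data": {
            "Feedback-1": web_data,
            "Feedback-DB": db_rows,  # hypothetical key for the database records
        }
    }


# Demo data: an in-memory database instead of example.db.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE source_table (comment TEXT, rate TEXT, response TEXT)")
conn.execute(
    "INSERT INTO source_table VALUES (?, ?, ?)",
    ("Too thick for braces", "4", "-"),
)

web_data = [{"comment": "Very fluffy thread", "rate": "3", "response": "-"}]
structure = build_structure(web_data, rows_as_dicts(conn, "source_table"))
print(json.dumps(structure, ensure_ascii=False, indent=2))
conn.close()
```

Plain dictionaries are used here instead of a DataFrame because they serialise to JSON directly with `json.dumps`.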

For the analytics module, the implementation can take the following form:

    import json
    import sqlite3

    import requests

    # Replace with your own key for the Serverspace GPT API
    API_KEY = 'YOUR_API_KEY'

    # Connect to the SQLite database
    conn = sqlite3.connect('example.db')
    cursor = conn.cursor()


    # Retrieve data from the database
    def fetch_json_from_db(table_name):
        cursor.execute(f'SELECT data FROM {table_name}')
        data = cursor.fetchall()
        # Take the first result if there are multiple records in the table
        if data:
            return data[0][0]  # The first value of the first row (assuming it contains JSON)
        return None


    # Main function
    def main():
        table_name = 'parsed_data'

        # Retrieve data in JSON format from the database
        json_data = fetch_json_from_db(table_name)

        if json_data:
            # Convert the JSON string into a Python object
            json_object = json.loads(json_data)

            # Example of extracting a piece of data for use in a query
            items = json_object.get('List of Consumers Data', {}).get('Feedback-1', [])
            if not items:
                print('No feedback records found.')
                return
            feedback = items[0]

            # Form the body of the query based on the extracted data
            payload = {
                'model': 'openchat-3.5-0106',
                'max_tokens': 1024,
                'top_p': 0.1,
                'temperature': 0.6,
                'messages': [
                    {
                        'role': 'user',
                        'content': (
                            'Pretend you are a data analyst and give a score for this '
                            'dataset based on the fields provided. '
                            f"comment: {feedback['comment']}, rate: {feedback['rate']}, "
                            f"response: {feedback['response']}"
                        )
                    }
                ]
            }

            # Set the URL and headers
            url = 'https://gpt.serverspace.us/v1/chat/completions'
            headers = {
                'accept': 'application/json',
                'Content-Type': 'application/json',
                'Authorization': f'Bearer {API_KEY}'
            }

            # Send the POST request
            response = requests.post(url, headers=headers, data=json.dumps(payload), timeout=60)

            # Process the response
            if response.status_code == 200:
                print('Request successfully completed. Server response:')
                print(response.json())
            else:
                print(f'Error: {response.status_code}')
                print(response.text)
        else:
            print('Failed to retrieve data from database.')


    # Run the script
    if __name__ == '__main__':
        main()

    # Close the database connection
    conn.close()

Explanation of the operation of the analytical module:

- Database connection and data extraction: The script connects to a SQLite database and extracts data in JSON format from the specified table. In this example, it is assumed that the parsed_data table contains a data column where the JSON string is stored.

- Forming the request body: The first element of the ‘Feedback-1’ array is extracted from the JSON and used to form the request. Its fields are passed in the content field of the API request together with the instruction for the model.

- Sending the POST request: requests.post() is used to send the request to the specified URL. The headers include Authorization, which carries the API key for authentication.

- Response Processing: The script checks the status of the response. If the request is successful, the response from the server is printed. If an error occurred, an error code and the response text are output.
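The scheme described earlier also saves the returned analytics back to the database, which the module above does not yet do. A minimal sketch of that step follows. It assumes the service returns an OpenAI-style chat completion body with the text under `choices[0].message.content`; this shape is an assumption suggested by the endpoint path, so check it against a real response. The helper names and the `analytics_results` table are hypothetical, and a hand-written response with an in-memory database is used for the demo.

```python
import sqlite3


def extract_reply(response_json):
    """Return the model's text, or None if the expected fields are missing."""
    try:
        # Assumed response shape: {"choices": [{"message": {"content": ...}}]}
        return response_json["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError):
        return None


def save_analytics(conn, text, table_name="analytics_results"):
    """Store one analytics result as a row in a results table."""
    conn.execute(
        f'CREATE TABLE IF NOT EXISTS "{table_name}" ('
        "id INTEGER PRIMARY KEY AUTOINCREMENT, result TEXT)"
    )
    conn.execute(f'INSERT INTO "{table_name}" (result) VALUES (?)', (text,))
    conn.commit()


# Demo: a hand-written response instead of a real API call.
sample_response = {
    "choices": [{"message": {"role": "assistant", "content": "Score: 3/5"}}]
}

conn = sqlite3.connect(":memory:")
reply = extract_reply(sample_response)
if reply is not None:
    save_analytics(conn, reply)

stored = conn.execute('SELECT result FROM "analytics_results"').fetchall()
print(stored)
conn.close()
```

In the analytics module, `extract_reply(response.json())` would replace the plain `print(response.json())` in the success branch.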

The instruction ‘Pretend you are a data analyst’ in the query is needed because the model has not been fine-tuned for this task. For ease of use, the module can also be integrated with a web application for managing the database and uploading the analytics results. The GPT API service from Serverspace can be used in the architecture of any tool that can send HTTP/S requests, which makes it a universal solution for optimising business processes.

The main requirement for working with the service is to have data collection modules or prepared datasets that can be passed for analysis with a specific request. For example, many marketplaces let you download analytics from your personal account via API, and this data can be used in the same way in your own solutions based on Serverspace's GPT API.

At the moment, managing a personal account can hardly be entrusted to the service in full; however, data analytics scenarios built on the GPT API show excellent results.

As a result, processing customer feedback is automated and put on stream, which makes it much easier to get timely analytics on customer responses and needs.