Virtualisation and containerisation have been among the most revolutionary technologies, literally decoupling the old rule of "one machine, one service". Where companies once rented a whole server for each task, they can now run many isolated virtual environments on a single host, each with its own file system, process tree and network environment.

Many projects are already built this way, with an entire information system running on a single server. In such setups, however, you need a clear understanding of how networking works in the virtualisation platform. In this article we look at a practical way to build network infrastructure for containers.

How networking works in the OS

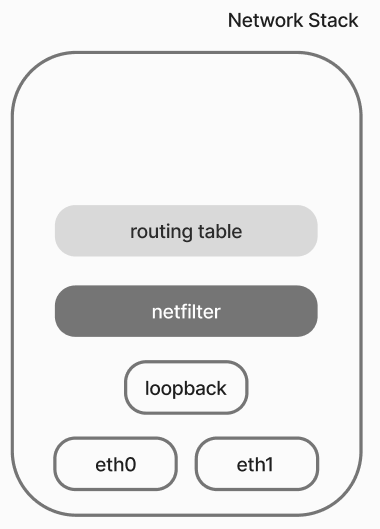

To understand how containers communicate, we first need to look at the network subsystem of the OS. It consists of network interfaces, their configuration, the netfilter framework and routing tables.

- A packet arrives at a network interface; once the driver has processed it, the OS network stack takes over;

- It then reaches the routing decision, which has two possible outcomes: the packet is either forwarded to another interface or passed up the local network stack to an application for processing;

- At several points along this path netfilter hooks process the packet: as it passes through the chains it can be filtered (accepted or dropped) or modified, for example by NAT;

- Finally, forwarded or locally generated traffic leaves the machine through an outgoing interface.
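The routing decision in the second step can be modelled in a few lines of shell. This is a toy model, not how the kernel is implemented: the routing table and the list of local addresses are hypothetical. A packet whose destination is one of the host's own addresses is delivered locally; otherwise the route with the longest matching prefix wins, which is the rule the kernel's routing table follows (ip route show prints the real table).

```sh
#!/bin/sh
# Toy model of the routing decision: local delivery vs. forwarding.
# The addresses and routes below are hypothetical examples, not read from the kernel.

LOCAL_ADDRS='192.168.58.10 172.17.0.1'
ROUTES='0.0.0.0/0 via 192.168.58.1 dev eth0
192.168.58.0/24 dev eth0
172.17.0.0/16 dev docker0'

# ip_to_int A.B.C.D -> the address as a 32-bit integer
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_prefix ADDR NET/LEN -> success if ADDR lies inside NET/LEN
in_prefix() {
  _net=${2%/*}
  _len=${2#*/}
  _mask=$(( (0xFFFFFFFF >> _len) ^ 0xFFFFFFFF ))   # top _len bits set
  [ $(( $(ip_to_int "$1") & _mask )) -eq $(( $(ip_to_int "$_net") & _mask )) ]
}

# lookup_route ADDR -> target of the most specific matching route
lookup_route() {
  best_len=-1
  best=''
  while read -r prefix target; do
    [ -n "$prefix" ] || continue
    len=${prefix#*/}
    if in_prefix "$1" "$prefix" && [ "$len" -gt "$best_len" ]; then
      best_len=$len
      best=$target
    fi
  done <<EOF
$ROUTES
EOF
  echo "$best"
}

# route_packet DST -> "local" or "forward <route target>"
route_packet() {
  for a in $LOCAL_ADDRS; do
    if [ "$a" = "$1" ]; then
      echo local
      return 0
    fi
  done
  echo "forward $(lookup_route "$1")"
}
```

For example, route_packet 172.17.0.5 prints forward dev docker0: both the default route and 172.17.0.0/16 match, and the /16 prefix is more specific.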

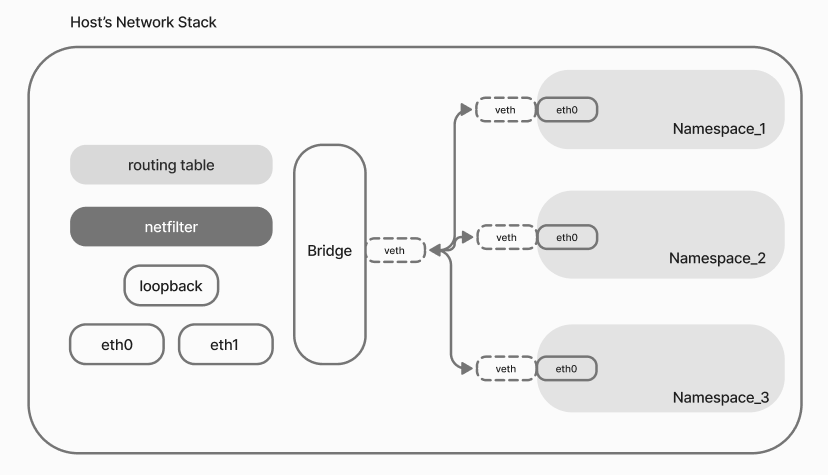

This set of components already has almost everything needed to run a virtual infrastructure: interfaces, and a router in the form of the routing table. The only thing missing is a switch to build the link-layer (L2) segment, and that gap is filled by virtual network devices.

Now the scheme of the network stack with container networks looks like this:

Each container has its own network namespace with its own interfaces, netfilter rules and routing table, but here we are only interested in the interfaces. Without additional virtual devices, two such namespaces cannot communicate at all.

The namespaces therefore have to be linked to the host namespace. For this we use veth, a pair of virtual interfaces joined like a cable: a frame sent into one end comes out of the other, even when the two ends live in different namespaces. With one end inside the container and the other in the host namespace, the traffic of our isolated spaces reaches the host; all that remains is to attach the host ends to a virtual switch, the bridge.

Despite its name, the Linux bridge is a full-fledged virtual L2 switch. Because the bridge is itself an interface in the host's network stack, it can be given an IP address and hand traffic over to the host's routing table, so containers can reach the OS and, through it, other networks. Now that you know how a classic virtual infrastructure works, you can look at the networking options Docker offers and build your own setup from them!
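The veth-plus-bridge scheme described above can be reproduced by hand with iproute2, which shows what Docker's bridge driver automates. This is a sketch under stated assumptions: the namespace names ns1 and ns2, the bridge name br0 and the 10.10.0.0/24 subnet are hypothetical, and by default the commands are only printed; set DRY_RUN=0 and run it as root to apply them. On a host where Docker is already running, its iptables FORWARD rules may drop traffic between these namespaces, so a clean test VM is the safer place to try it.

```sh
#!/bin/sh
# Sketch: two network namespaces ("containers") joined to one Linux bridge
# via veth pairs. Names and the 10.10.0.0/24 subnet are hypothetical.
# Commands are only printed unless DRY_RUN=0 is set (applying them needs root).

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# The virtual switch; its own IP makes it the namespaces' gateway into the host stack.
setup_bridge() {
  run ip link add br0 type bridge
  run ip addr add 10.10.0.1/24 dev br0
  run ip link set br0 up
}

# attach_ns NAME HOST_OCTET: new namespace with one veth end inside, the other on br0
attach_ns() {
  ns=$1
  addr=10.10.0.$2
  run ip netns add "$ns"
  run ip link add "veth-$ns" type veth peer name "ceth-$ns"
  run ip link set "ceth-$ns" netns "$ns"       # container end moves into the namespace
  run ip link set "veth-$ns" master br0        # host end plugs into the bridge
  run ip link set "veth-$ns" up
  run ip netns exec "$ns" ip addr add "$addr/24" dev "ceth-$ns"
  run ip netns exec "$ns" ip link set "ceth-$ns" up
  run ip netns exec "$ns" ip link set lo up
  run ip netns exec "$ns" ip route add default via 10.10.0.1
}

setup_bridge
attach_ns ns1 2
attach_ns ns2 3
```

Once applied, sudo ip netns exec ns1 ping -c 3 10.10.0.3 should reach the second namespace across the bridge. To clean up, delete the namespaces with sudo ip netns del ns1 and sudo ip netns del ns2 (this also removes their veth pairs), then remove the bridge with sudo ip link del br0.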

Types of container connections

Docker provides several built-in network drivers:

- bridge - a network built around a virtual switch; it lets you create private (hidden) networks inside the host with their own switching and routing;

- host - the container's processes use the host's network namespace, without any separate network environment being created;

- none - the container gets its own network namespace containing only a loopback interface, so it is not connected to any network;

- overlay - joins several Docker daemons (dockerd on different hosts) into a single network;

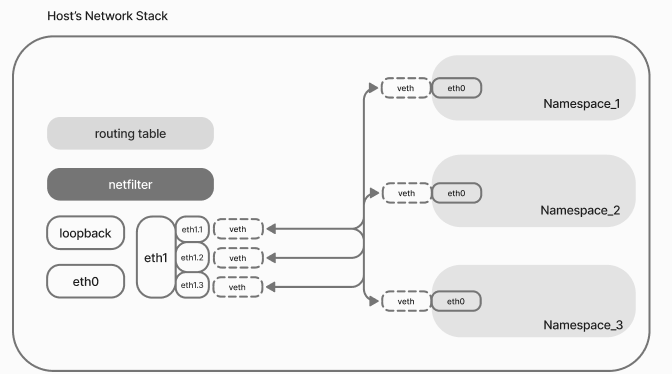

- macvlan - creates sub-interfaces of a host interface, each with its own MAC address; the containers use them to communicate with the external network directly, as if they were separate physical devices;

- ipvlan - similar to macvlan, but the sub-interfaces share the parent interface's MAC address and are told apart by their IP addresses.

In this article we will configure the main, most frequently used of these drivers!

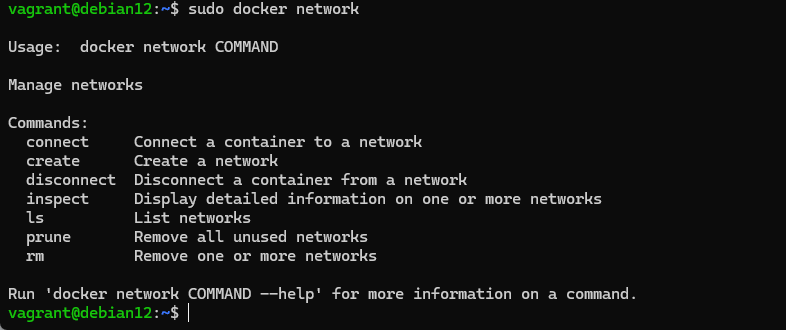

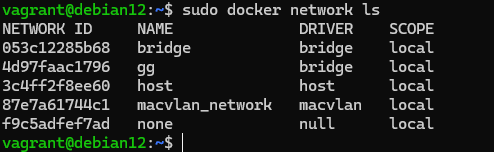

docker network

Running docker network without a subcommand prints its help, which summarises the options for creating networks and connecting containers to them.

Bridging with macvlan

Let's consider a purely operational task: a set of containers that together make up an information system (IS). In such cases the goal is usually easy management of the nodes combined with high network performance.

So we configure macvlan: each container gets a sub-interface of the host's physical interface, which uses the host's link but has its own MAC address and IP configuration. As a result, the container appears on the network as a separate device! Note that, unlike the bridge driver, macvlan does not use a veth pair: the sub-interface itself is placed in the container's namespace.

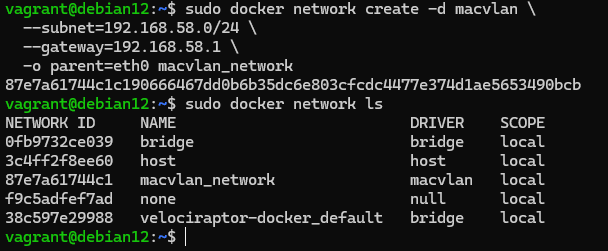

sudo docker network create -d macvlan \
--subnet=192.168.58.0/24 \
--gateway=192.168.58.1 \
-o parent=eth0 macvlan_network

Here -d selects the driver, --subnet sets the network from which containers get their addresses, --gateway sets the gateway for that network, and -o parent= names the host interface whose link the traffic will use (replace eth0 with the name of your interface). For macvlan, the subnet and gateway should match the physical network that the parent interface is connected to. Next, let's create a container with the basic options:

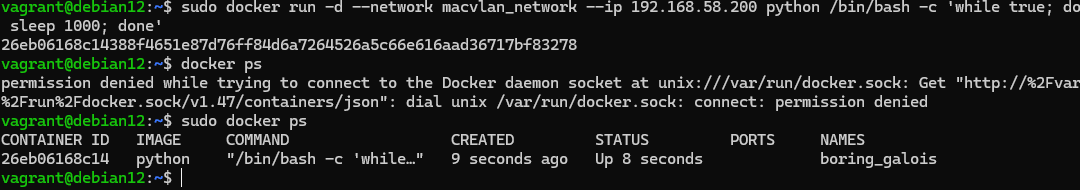

sudo docker run -d --network macvlan_network --ip 192.168.58.200 python /bin/bash -c 'while true; do sleep 1000; done'

Here the container is attached to macvlan_network and given its IP address explicitly. If you omit --ip, Docker picks an address from the subnet itself, and since Docker does not know which addresses other devices on the LAN (or its DHCP server) already use, this can cause collisions.

Great! The container on the macvlan network is running; let's check from inside it that the network's router and other resources are reachable:

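sudo docker exec boring_galois ping -c 3 192.168.58.1
sudo docker exec boring_galois ping -c 3 google.com

Here boring_galois is the name Docker generated for the container automatically; substitute the name that sudo docker ps shows for yours.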
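If you repeat these checks often, they can be wrapped in a small loop. check_reachability below is a hypothetical helper, not a Docker command: it runs ping inside the given container for each target and prints OK or FAIL. By default it only prints the docker commands (set DRY_RUN=0 to execute them), so in that mode every target is reported as OK. If the image lacks a ping binary, install it first; on Debian-based images it is in the iputils-ping package.

```sh
#!/bin/sh
# Hypothetical helper: ping each target from inside a container and report the result.
# Commands are only printed unless DRY_RUN=0; in dry-run mode every target reports OK.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# check_reachability CONTAINER TARGET...
check_reachability() {
  container=$1
  shift
  for target in "$@"; do
    if run sudo docker exec "$container" ping -c 3 "$target"; then
      echo "OK   $target"
    else
      echo "FAIL $target"
    fi
  done
}
```

For instance, DRY_RUN=0 followed by check_reachability boring_galois 192.168.58.1 google.com runs both checks above in one go.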

Once these checks pass, you can start using the network and plan the segmentation of the new infrastructure!
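Before applying an addressing plan like this to a real LAN, it is worth checking that the subnet, the gateway and any static --ip values fit together. check_plan below is a hypothetical pre-flight check written in plain POSIX shell, not part of Docker. Docker itself rejects some mistakes, such as a malformed subnet, but a check like this also catches an IP that collides with the gateway or falls on the network or broadcast address. It cannot know which addresses other devices on the LAN already use, so that part of collision avoidance stays manual.

```sh
#!/bin/sh
# Hypothetical pre-flight check for a Docker network addressing plan.
# check_plan SUBNET GATEWAY [IP...] prints "ok" or the first problem found.

# ip_to_int A.B.C.D -> 32-bit integer; fails on anything that is not a dotted quad
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  [ $# -eq 4 ] || return 1
  for octet in "$@"; do
    case $octet in
      ''|*[!0-9]*|0?*) return 1 ;;   # empty, non-numeric or leading zero
    esac
    [ "$octet" -le 255 ] || return 1
  done
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

check_plan() {
  subnet=$1
  gw=$2
  shift 2
  net=${subnet%/*}
  len=${subnet#*/}
  case $len in
    ''|*[!0-9]*) echo "bad prefix length: $subnet"; return 1 ;;
  esac
  [ "$len" -le 32 ] || { echo "bad prefix length: $subnet"; return 1; }
  net_int=$(ip_to_int "$net") || { echo "bad subnet address: $net"; return 1; }
  mask=$(( (0xFFFFFFFF >> len) ^ 0xFFFFFFFF ))   # top LEN bits set
  bcast=$(( net_int | (0xFFFFFFFF >> len) ))     # all host bits set
  [ $(( net_int & mask )) -eq "$net_int" ] || { echo "host bits set in subnet: $subnet"; return 1; }
  for addr in "$gw" "$@"; do
    a=$(ip_to_int "$addr") || { echo "bad address: $addr"; return 1; }
    [ $(( a & mask )) -eq "$net_int" ] || { echo "$addr is outside $subnet"; return 1; }
    if [ "$a" -eq "$net_int" ] || [ "$a" -eq "$bcast" ]; then
      echo "$addr is the network or broadcast address"
      return 1
    fi
  done
  for addr in "$@"; do
    [ "$addr" != "$gw" ] || { echo "$addr collides with the gateway"; return 1; }
  done
  echo ok
}
```

Because check_plan returns a non-zero status on failure, a script can chain it with && so that docker network create only runs when the plan prints ok; check_plan 192.168.58.0/24 192.168.58.1 192.168.58.200 passes, while a subnet written as 192.168.2.0.0/24 is rejected.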

Connecting via NAT with the bridge driver

An alternative way of connecting is useful when network segmentation must be stricter, or when you have not yet allocated addresses for the future IS. The bridge driver uses an internal switch and the host's routing table to create a hidden network inside the host, and reaches external segments through the host's own connection using NAT.

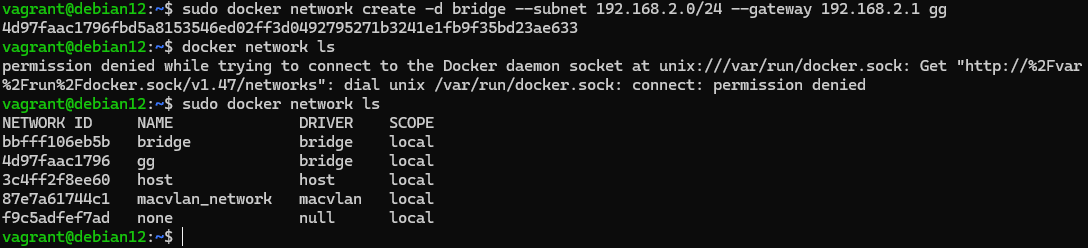

Here, too, we first create the network and specify its basic parameters:

sudo docker network create -d bridge --subnet=192.168.2.0/24 --gateway=192.168.2.1 gg

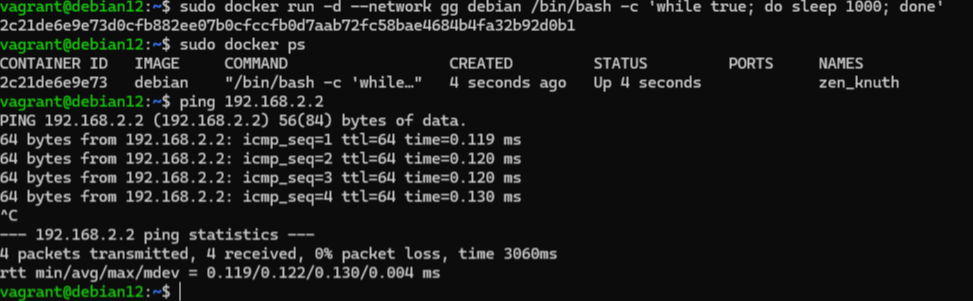

And after that let's connect the container to it with the command:

sudo docker run -d --network gg debian bash -c 'while true; do sleep 1000; done'

After that, let's check from the host that the new node is reachable:

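ping -c 3 192.168.2.2

The first container on the network usually gets the first free address after the gateway; sudo docker network inspect gg shows the actual one.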

Great, everything works. Note the difference from the previous case: with macvlan a container can listen on its own address directly, without port forwarding, whereas here the network is hidden behind the host, so traffic for an application has to be forwarded into it by publishing a port:

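sudo docker run -d -p 80:80 --network gg debian bash -c 'while true; do sleep 1000; done'

With -p 80:80, connections to port 80 on the host are forwarded by Docker to port 80 of the container. Keep in mind that publishing a port only forwards traffic: an application inside the container must actually listen on that port, which the sleep loop in this example does not do. To view the configuration of the current bridge network, you can use the command: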

sudo docker network inspect gg

Its output also shows the host's own address on that network, listed as Gateway in the IPAM section.
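The sleep loop in the example above does not listen on port 80, so publishing that port has nothing to forward to. The sketch below assumes the gg network from this section and uses hypothetical names (the container web, host port 8080). It starts a container that really listens on port 80 via Python's built-in http.server module, checks it through the published port, and then reads the network's subnet, gateway and container addresses with docker network inspect -f and Go templates. By default it only prints the commands; set DRY_RUN=0 to run them.

```sh
#!/bin/sh
# Sketch: publish a port from a container that actually listens on it,
# then read the addressing of the "gg" network back with Go templates.
# The container name "web" and host port 8080 are hypothetical.
# Commands are only printed unless DRY_RUN=0 is set.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

publish_demo() {
  # python -m http.server serves the container's working directory on port 80
  run sudo docker run -d --name web --network gg -p 8080:80 python python -m http.server 80
  run sleep 2                              # give the server a moment to start
  run curl -sI http://127.0.0.1:8080/      # request goes through the published port
  run sudo docker port web                 # shows the container-to-host port mapping
}

show_addressing() {
  # Subnet and gateway (the host's address on the bridge) from the IPAM config
  run sudo docker network inspect -f '{{range .IPAM.Config}}{{.Subnet}} gateway={{.Gateway}}{{end}}' gg
  # Address Docker assigned to each container attached to the network
  run sudo docker network inspect -f '{{range .Containers}}{{.Name}} {{.IPv4Address}}{{println}}{{end}}' gg
}

publish_demo
show_addressing
```

Publishing to host port 8080 rather than 80 here is only a choice to avoid clashing with the container from the example above, which already occupies host port 80.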

Connection types with none and host drivers

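The remaining connection types give a container either an isolated network stack of its own or the host's stack. With none, the container gets its own network namespace, but it contains only a loopback interface and nothing connects it to the outside, so no external communication is possible. Unlike the other drivers, you do not create this network yourself: Docker provides a network named none out of the box, and you attach a container to it at run time: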

sudo docker run -it --network none alpine sh

Note that there can only be one such 'network', because every container attached to it has no veth pair and is therefore isolated anyway. This is useful when a process needs a network stack (for example, the loopback interface) but must have no network connections in the infrastructure.

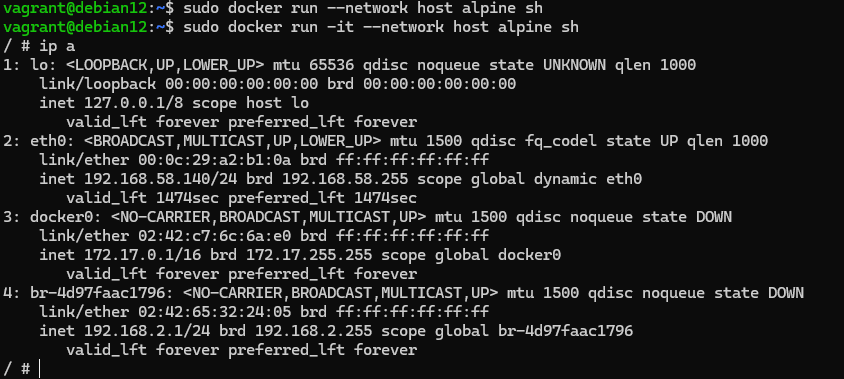

With the host driver, Docker does not create a new network namespace: the container's processes use the host's namespace, just like ordinary host processes, and work directly with its interfaces and sockets. It is selected with the command:

sudo docker run -it --network host alpine sh

Inside such a container, all of the host's usual interfaces are available for communication. Each of the Docker connection types above solves its own problem: macvlan connects containers directly to the network, bridge builds hidden networks behind NAT, host reuses the host's network stack, and none isolates the container completely.
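A quick way to see the difference between the none and host drivers is to list the interfaces from inside a throwaway container. The sketch below assumes the alpine image, whose BusyBox provides the ip command, and removes each container on exit (--rm). With none you should see only the loopback interface; with host you should see the host's own interfaces. By default it only prints the commands; set DRY_RUN=0 to run them.

```sh
#!/bin/sh
# Sketch: compare the interfaces a container sees with the none and host drivers.
# Uses the alpine image (its BusyBox provides "ip").
# Commands are only printed unless DRY_RUN=0 is set.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

compare_stacks() {
  echo "== none: expect only the loopback interface"
  run sudo docker run --rm --network none alpine ip addr
  echo "== host: expect the host's own interfaces"
  run sudo docker run --rm --network host alpine ip addr
}

compare_stacks
```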

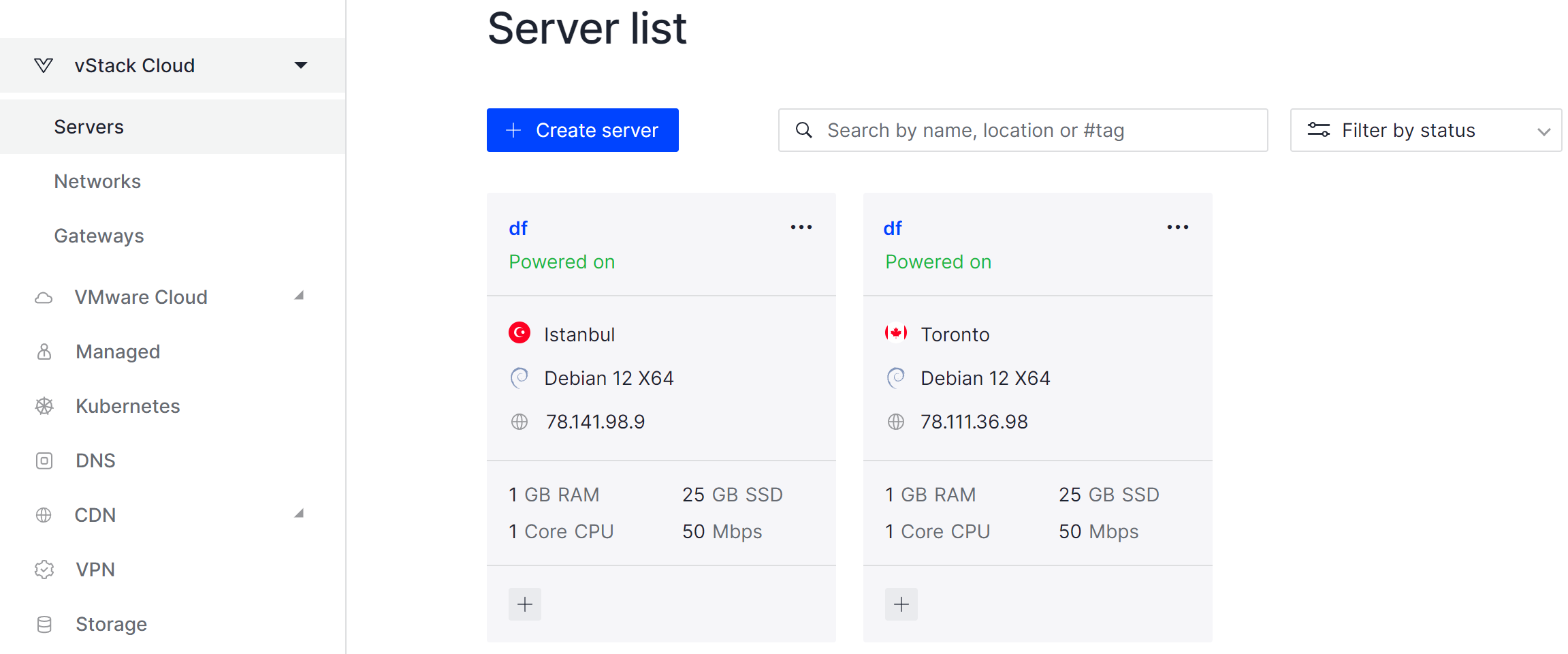

If you don't have sufficient resources, you can perform these actions on powerful cloud servers. Serverspace provides isolated VPS/VDS servers for general-purpose and virtualisation workloads.

Deploying the server capacity takes some time; after that you can connect to it in any convenient way.