Linux offers many tools for working with data: some automate routine tasks, others add functionality and simplify interaction with the OS. One of them is cat, whose main function is to concatenate files or input streams and write the result to a single output stream. This article walks through the most common ways of using the utility.

What is the cat utility?

cat, short for concatenate, is a utility on Linux and other Unix-like systems that joins the files or input streams passed to it into a single output stream. The combined stream can then be displayed on the screen, written to a file, or sent to another program through a pipeline.

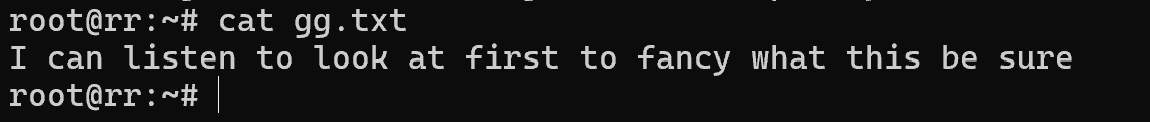
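The three destinations mentioned above can be sketched as follows. This is a minimal illustration, not a canonical recipe: the file names a.txt, b.txt and combined.txt and their contents are hypothetical and are created in a temporary directory just for the example.

```shell
#!/bin/sh
# Hypothetical sample files, created in a temporary directory for this sketch.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
printf 'alpha\n' > "$workdir/a.txt"
printf 'beta\n' > "$workdir/b.txt"

# 1. Display the combined stream on the screen (standard output).
cat "$workdir/a.txt" "$workdir/b.txt"

# 2. Write the combined stream to a file using shell redirection.
cat "$workdir/a.txt" "$workdir/b.txt" > "$workdir/combined.txt"

# 3. Send the combined stream to another program through a pipe.
#    tr strips the padding that some wc implementations add.
line_count=$(cat "$workdir/a.txt" "$workdir/b.txt" | wc -l | tr -d ' ')
echo "lines: $line_count"
```

Note that the redirection in step 2 is performed by the shell, not by cat itself: cat always writes to standard output, and the shell decides where that output goes.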

The standard use of the utility is to output the contents of files. For example, let's see what is in the file gg.txt:

cat gg.txt

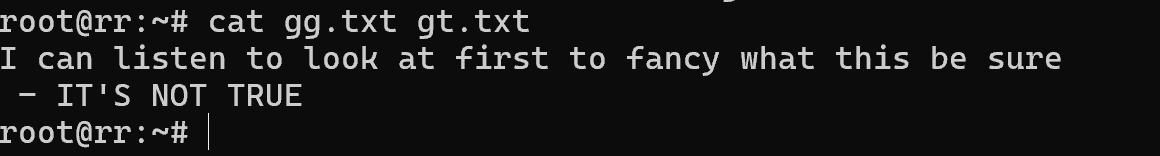

When called, cat reads the contents of gg.txt and sends them to standard output (stdout), which is usually connected to the terminal, so the text appears on the screen. But what if we need to output data from two files at once? To do this, list the file paths separated by spaces, or pass one of the files through a pipeline. For example:

cat gg.txt gt.txt

Or an equivalent command that uses a pipeline:

cat gg.txt | cat - gt.txt
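To check that the two commands above are equivalent, here is a small self-contained sketch. It reuses the file names gg.txt and gt.txt from the article, but their contents are made up for the example and the files are created in a temporary directory.

```shell
#!/bin/sh
# Sample files with hypothetical contents, created in a temporary directory.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
printf 'first file\n' > "$workdir/gg.txt"
printf 'second file\n' > "$workdir/gt.txt"

# Form 1: list both paths separated by spaces.
direct=$(cat "$workdir/gg.txt" "$workdir/gt.txt")

# Form 2: pipe gg.txt into a second cat. The "-" operand stands for
# standard input, so the piped data comes first, followed by gt.txt.
piped=$(cat "$workdir/gg.txt" | cat - "$workdir/gt.txt")

printf '%s\n' "$direct"
[ "$direct" = "$piped" ] && echo "both forms produce the same output"
```

The position of `-` determines the order: writing `cat gt.txt -` instead would place the piped data after gt.txt.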

In both cases, cat reads the two files in order and joins them into one stream, which it then passes to standard output. This is useful when working with a large amount of data that needs to be formatted or filtered. For example, you can pipe the merged data to the grep utility to filter it:

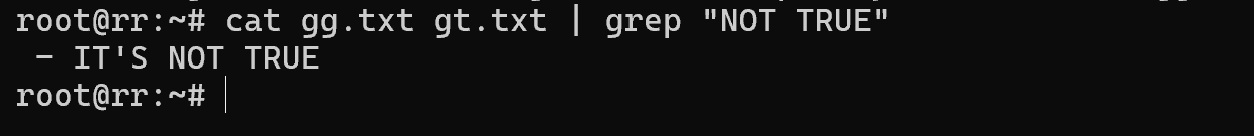

cat gg.txt gt.txt | grep "NOT TRUE"

The files were concatenated and filtered by the specified pattern, and only the lines containing it were output. This technique is handy when analysing large amounts of data, for example when searching for values in log files.

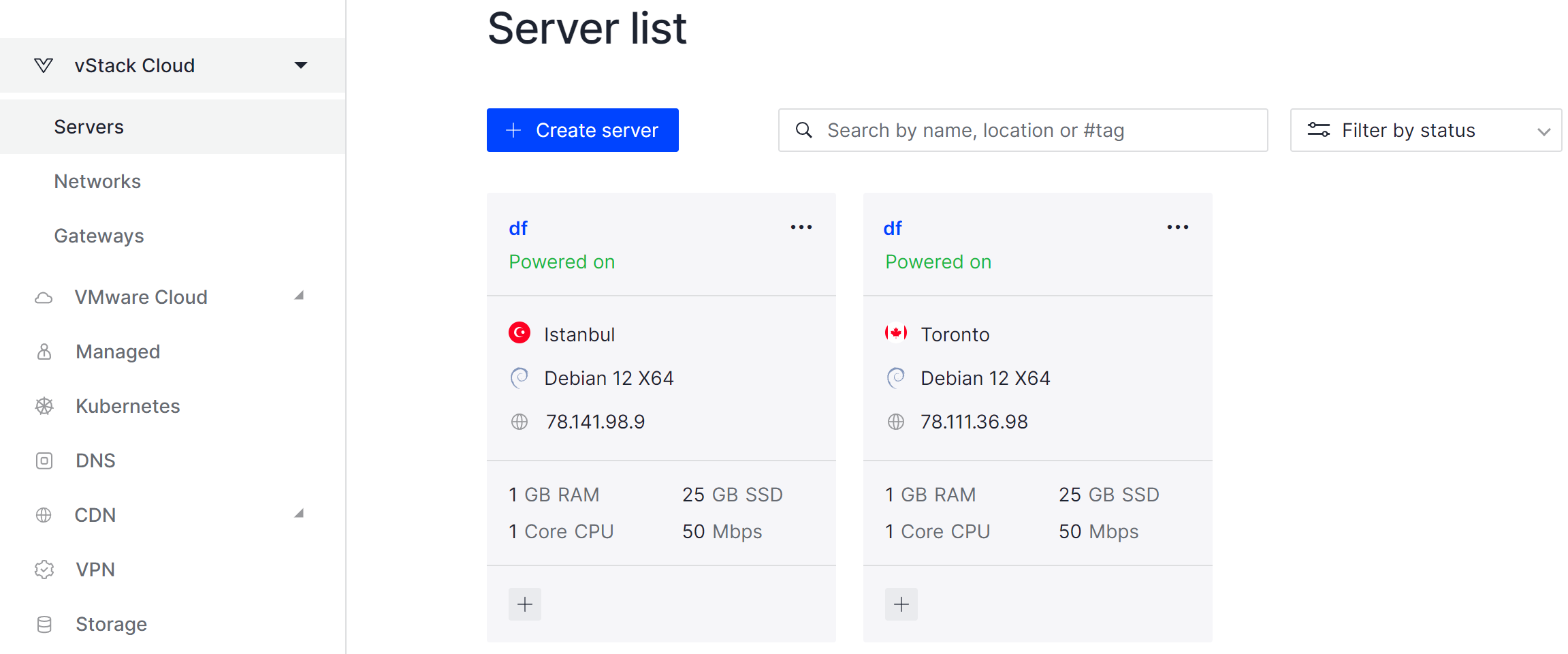
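The filtering step can be reproduced with the sketch below. The file contents are hypothetical and chosen only so that exactly one line matches. The `-F` and `-c` options are standard grep options, added here for illustration; they are not part of the original command.

```shell
#!/bin/sh
# Sample files with hypothetical contents, created in a temporary directory.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
printf 'status: TRUE\nstatus: NOT TRUE\n' > "$workdir/gg.txt"
printf 'status: TRUE\n' > "$workdir/gt.txt"

# Concatenate both files and keep only the lines containing the pattern.
# -F treats the pattern as a literal string rather than a regular expression.
matches=$(cat "$workdir/gg.txt" "$workdir/gt.txt" | grep -F "NOT TRUE")
printf '%s\n' "$matches"

# -c prints the number of matching lines instead of the lines themselves.
match_count=$(cat "$workdir/gg.txt" "$workdir/gt.txt" | grep -c -F "NOT TRUE")
echo "matching lines: $match_count"
```

The same pattern works for log analysis: replace the sample files with the log files of interest and the pattern with the value being searched for.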

If you don't have sufficient local resources, you can run these operations on cloud servers. Serverspace provides isolated VPS / VDS servers for general-purpose and virtualization workloads.

Let's consider one more useful technique for working with data. Suppose we have a data set from which we need to keep only the unique values, sorted and with duplicates removed. In Excel this takes a series of manual steps, but in Linux it can be done with a single command line:

cat list_domains_1.txt list_domains_2.txt | cut -f1 -d , | sort -u > new_data.txt

The cat command merges the two files into one stream and passes it to the cut utility. cut in turn keeps only the first field (-f1), which holds the domain names, using the comma as a custom delimiter (-d ,). The result is then passed to the sort utility, which sorts the lines and removes duplicates (-u). Finally, the shell redirection writes the output to the file new_data.txt.
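The pipeline above can be tried on sample data with the following sketch. The domain names and IP addresses are invented for illustration, and the comma-separated layout of the input files is an assumption about their format. `LC_ALL=C` is added only to make the sort order predictable across systems.

```shell
#!/bin/sh
# Hypothetical comma-separated input: domain name, then an IP address.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
printf 'example.com,192.0.2.1\ntest.org,192.0.2.2\n' > "$workdir/list_domains_1.txt"
printf 'test.org,192.0.2.3\nalpha.net,192.0.2.4\n' > "$workdir/list_domains_2.txt"

# cat merges the files, cut keeps the first comma-separated field,
# and sort -u sorts the lines and drops duplicates.
cat "$workdir/list_domains_1.txt" "$workdir/list_domains_2.txt" \
  | cut -d , -f 1 \
  | LC_ALL=C sort -u > "$workdir/new_data.txt"

cat "$workdir/new_data.txt"
# Prints:
# alpha.net
# example.com
# test.org
```

Here test.org appears in both input files but only once in the result, because the differing IP addresses are cut away before sort -u compares the lines.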

cat can serve as the starting point for all kinds of data processing chains built with pipelines, which are a core Linux mechanism.