Setting up a containerised infrastructure can be a lengthy process, and much of that time goes into installing and configuring services. For small and medium-sized projects this overhead is noticeable, and training specialists to use the CLI client so that responsibilities can be delegated takes even longer. Docker Desktop was developed to lower the entry threshold and make Docker more accessible. The previous article covered the installation process and platform requirements; be sure to check it out if you haven't installed Docker Desktop yet. This tutorial looks at how to operate and configure Docker Desktop.

What is Docker Desktop?

Docker Desktop is Docker's product for deploying ready-made applications and services together with their packaged dependencies. It is built on containers and includes modules that cover the complete software delivery and integration cycle.

This means that each container gets its own file, network and processor environment inside the OS, separated from the main OS at the logical level and running as a restricted process. A base OS with a minimal set of utilities is loaded inside, and the solution is built on top of it. As a result, the container does not depend on the software installed on the host OS, although it still shares the host kernel.

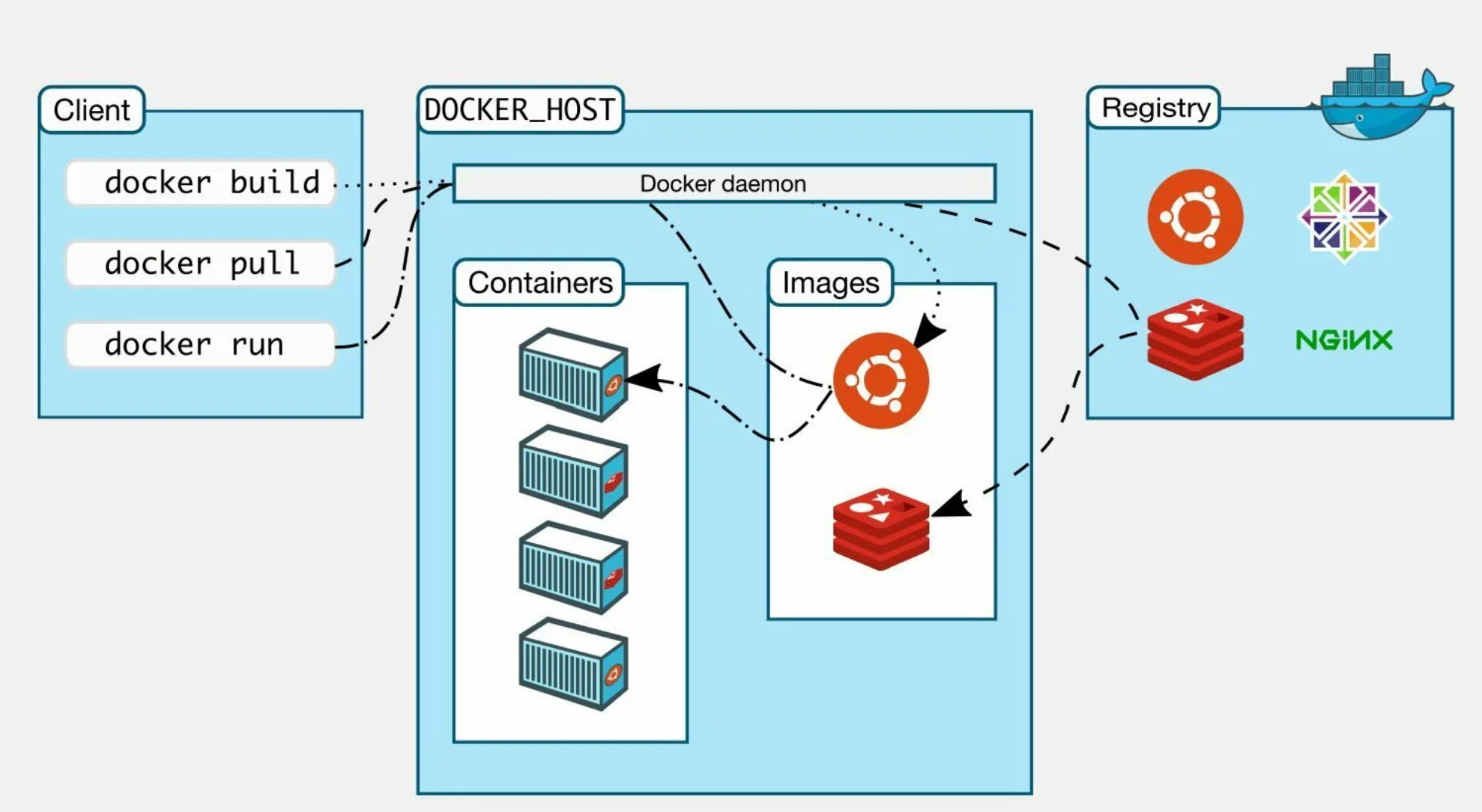

The diagram summarises the operation of the service: the client sends a command to the daemon, which in turn creates isolated environments, or containers, based on the selected images. Consequently, a physical device can be ‘split’, and many services, systems and applications can run on one device. The only thing that binds them together is the host OS kernel.

How to work with Docker Desktop?

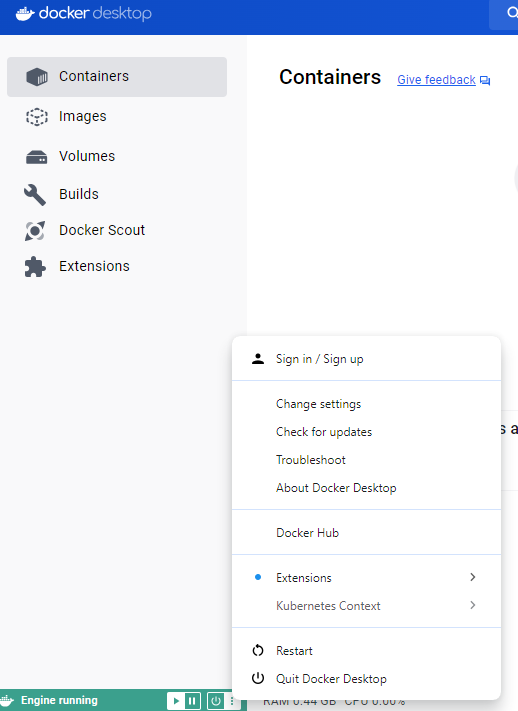

Let's open the application and look at the control panel window. The main sections are on the left:

- Containers, the list of stopped and running containers, along with their configuration and management;

- Images, the local repository of downloaded or built images, as well as external sources;

- Volumes, separate storage areas that give containers isolated file storage instead of mapping a host disk;

- Builds, where all the required dependencies are assembled into a single image automatically;

- Docker Scout, a scanner that checks containers for vulnerabilities;

- Extensions, add-ons for working with containers.

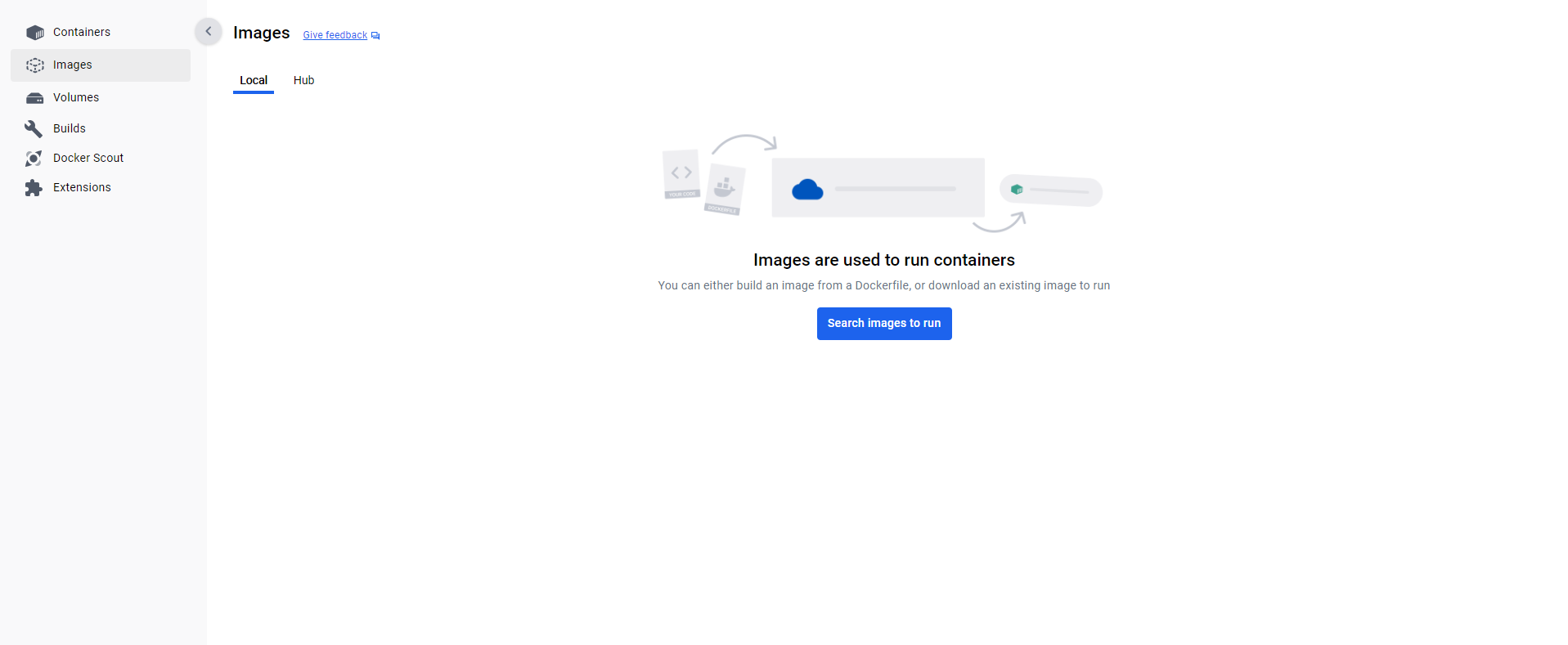

Everything is presented in a single control panel, which speeds up work with services and applications. The first step when working with containers is to build or download a ready-made image, so let's go to the Images tab.

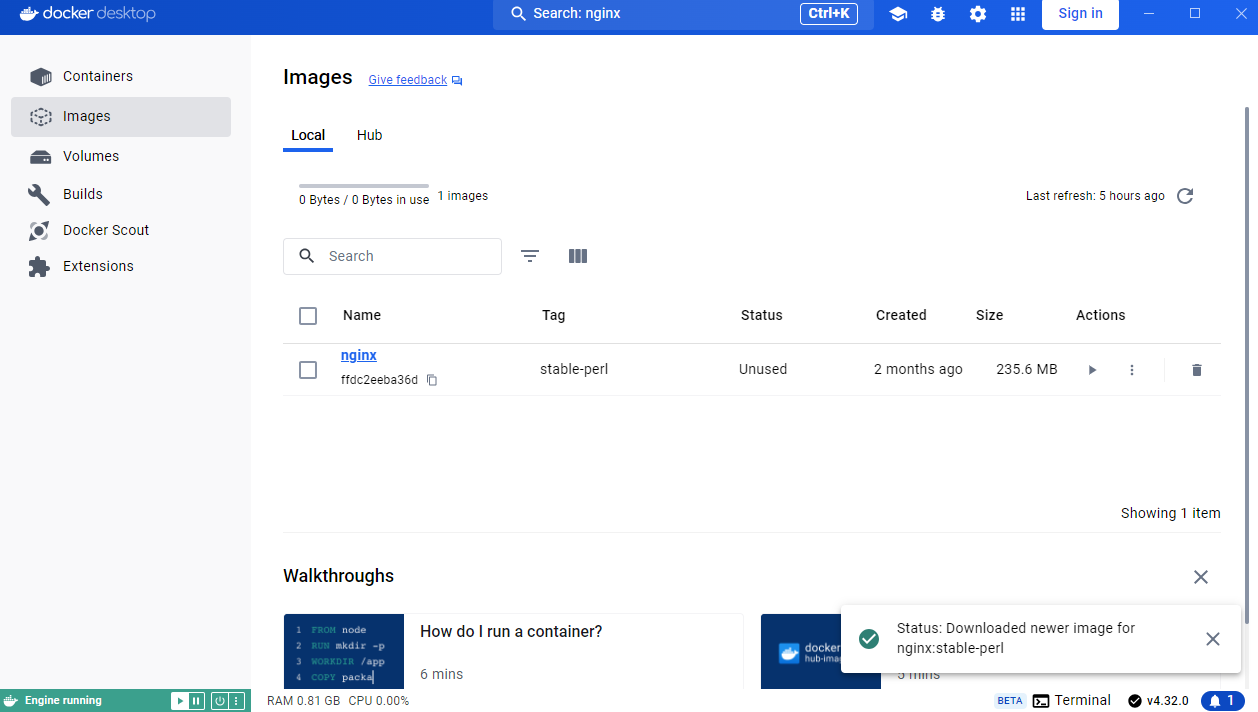

The panel is empty for now, so let's download the nginx image from the Docker Hub repository. To do this, click Search images to run and type in the name of the service. Select the required image in the drop-down list and download it.
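
For readers who also want the CLI equivalent of this step, here is a minimal Python sketch that builds the corresponding `docker pull` and `docker images` commands. The helper names `pull_command` and `list_images_command` and the `latest` tag are assumptions made for illustration; the sketch only prints the commands, so it works even where Docker is not installed.

```python
import shlex


def pull_command(image: str, tag: str = "latest") -> list[str]:
    """Build the argv list for `docker pull <image>:<tag>`."""
    return ["docker", "pull", f"{image}:{tag}"]


def list_images_command() -> list[str]:
    """Build the argv list for `docker images`, which lists local images."""
    return ["docker", "images"]


# Print the commands instead of executing them (hypothetical dry run).
print(shlex.join(pull_command("nginx")))
print(shlex.join(list_images_command()))
```

To actually run a command, the returned list can be passed to `subprocess.run(..., check=True)` on a machine where the Docker CLI is available.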

The downloaded image is shown as a row with its name, ID and tags, as well as status and other metadata. The control buttons are on the right. Previously, running an image in a container required a docker-compose.yml file or a list of settings; now the list is created automatically and can optionally be modified when the container is started.

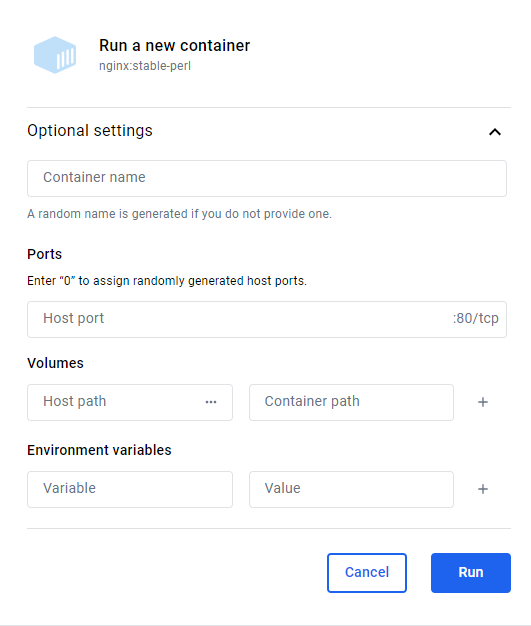

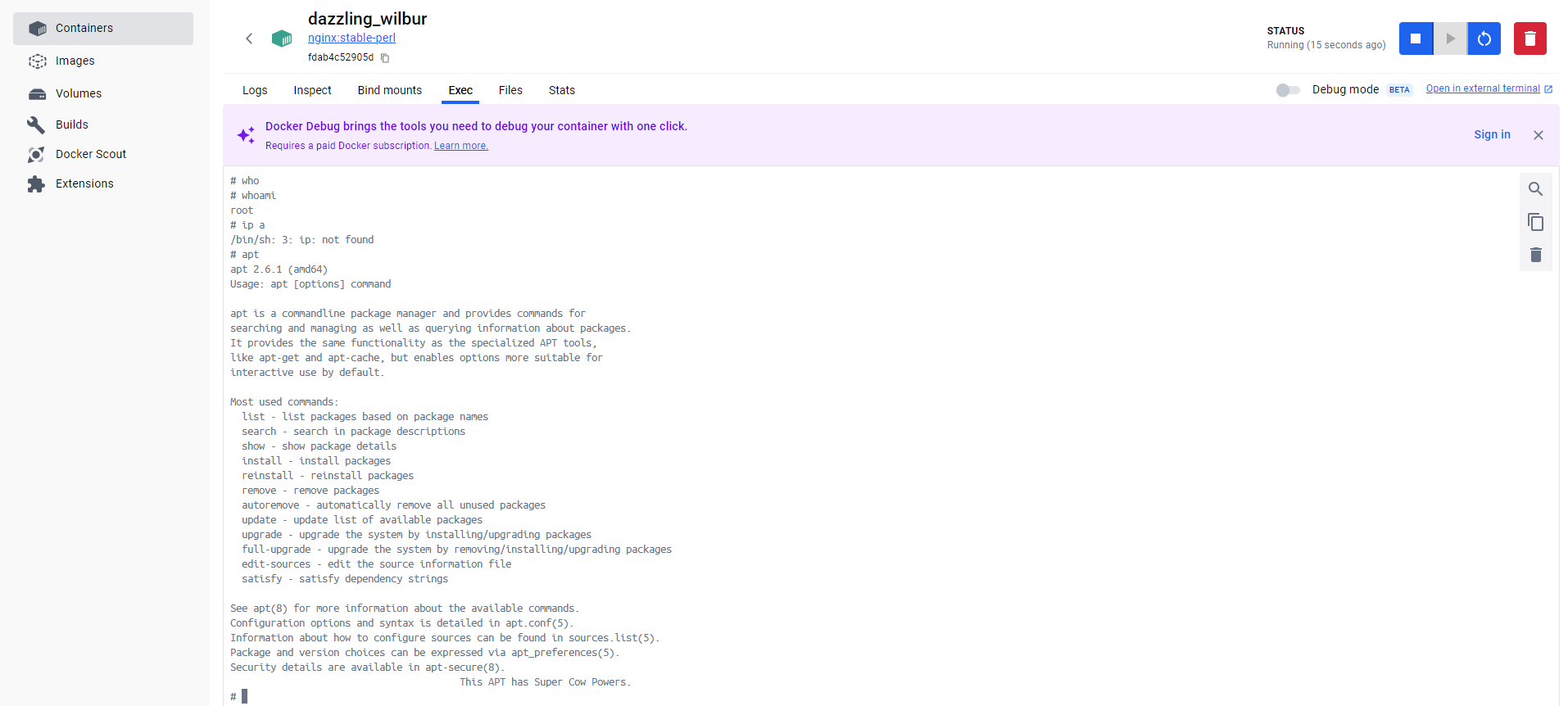

You can set a custom name for the container, publish the required ports, and attach volumes with files and environment variables, or leave everything at the defaults. Let's start the container and enter it: click the start button, go to the Containers tab and select the running container with our name. The Exec tab lets you connect to the terminal of the container itself and execute commands.
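
The optional settings in this dialog map onto flags of `docker run`. The sketch below is one way to express that mapping in Python; the function names, the container name `my-nginx`, the port pair `8080:80` and the `TZ` variable are all hypothetical values chosen for the example, not settings taken from this tutorial.

```python
import shlex


def run_command(image, name=None, ports=None, volumes=None, env=None):
    """Build the argv list for a detached `docker run`."""
    argv = ["docker", "run", "-d"]
    if name:
        argv += ["--name", name]
    # ports maps host port -> container port (flag: -p HOST:CONTAINER)
    for host_port, container_port in (ports or {}).items():
        argv += ["-p", f"{host_port}:{container_port}"]
    # volumes maps volume name or host path -> path inside the container
    for source, target in (volumes or {}).items():
        argv += ["-v", f"{source}:{target}"]
    # env maps variable name -> value (flag: -e KEY=VALUE)
    for key, value in (env or {}).items():
        argv += ["-e", f"{key}={value}"]
    argv.append(image)
    return argv


def exec_shell_command(name, shell="sh"):
    """Build the argv list for an interactive shell, like the Exec tab."""
    return ["docker", "exec", "-it", name, shell]


# Hypothetical values; printed rather than executed.
print(shlex.join(run_command("nginx", name="my-nginx",
                             ports={8080: 80}, env={"TZ": "UTC"})))
print(shlex.join(exec_shell_command("my-nginx")))
```

Leaving every optional argument out yields a plain `docker run -d nginx`, which corresponds to accepting the defaults in the dialog.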

You can also view the logs, mounted files, ports and statistics, and scan the container for vulnerabilities.
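
The same information is available from the CLI. As a rough sketch, the helper below (a hypothetical name, as is the container name `my-nginx`) collects the commands that roughly correspond to these tabs. It only builds and prints them.

```python
import shlex


def inspect_commands(name: str, tail: int = 50) -> dict[str, list[str]]:
    """Map each kind of container information to a Docker CLI command."""
    return {
        "logs": ["docker", "logs", "--tail", str(tail), name],
        "ports": ["docker", "port", name],
        # --no-stream prints one snapshot instead of a live view
        "stats": ["docker", "stats", "--no-stream", name],
        # `docker inspect` returns the full configuration, including mounts
        "config": ["docker", "inspect", name],
    }


for label, argv in inspect_commands("my-nginx").items():
    print(f"{label}: {shlex.join(argv)}")
```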

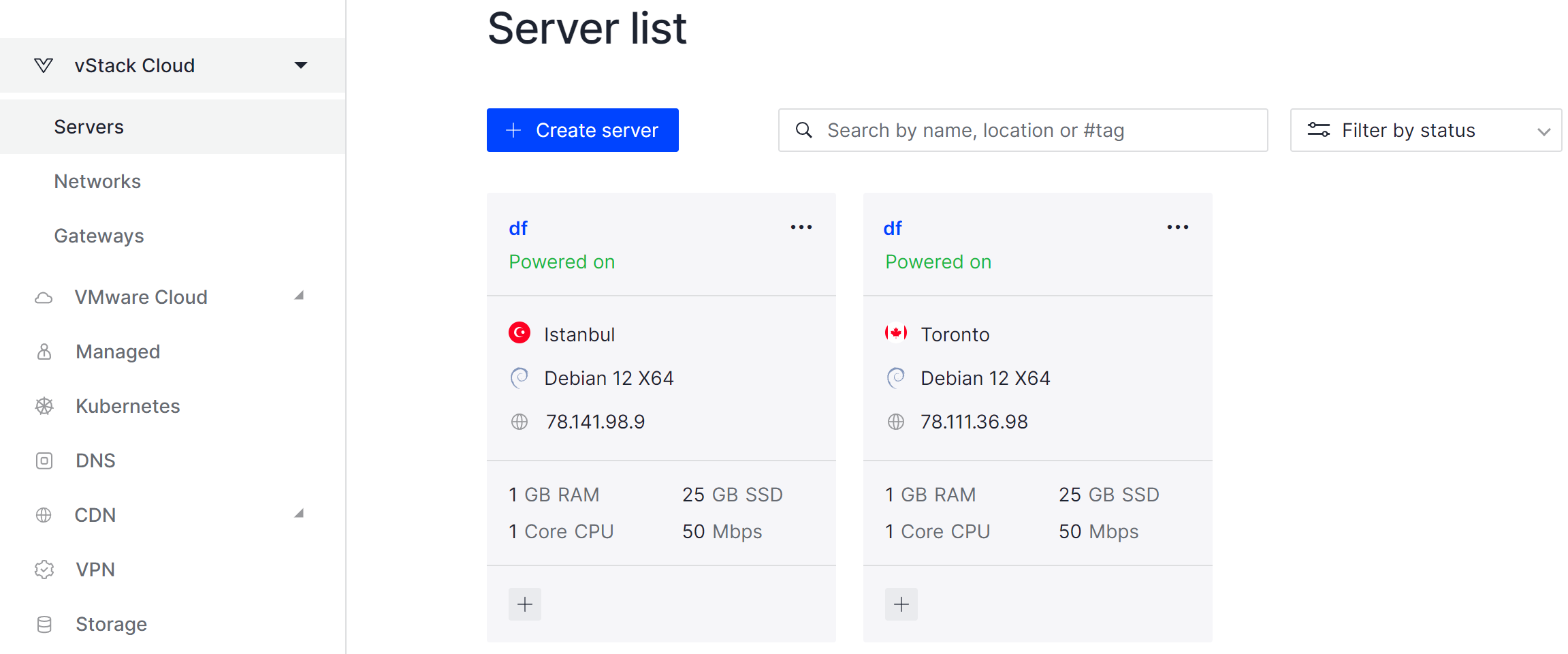

All steps in this tutorial can also be performed on powerful cloud servers. Serverspace provides isolated VPS / VDS servers for general-purpose and virtualised workloads.

Deploying the server capacity takes some time. After that you can connect in whichever way is convenient.

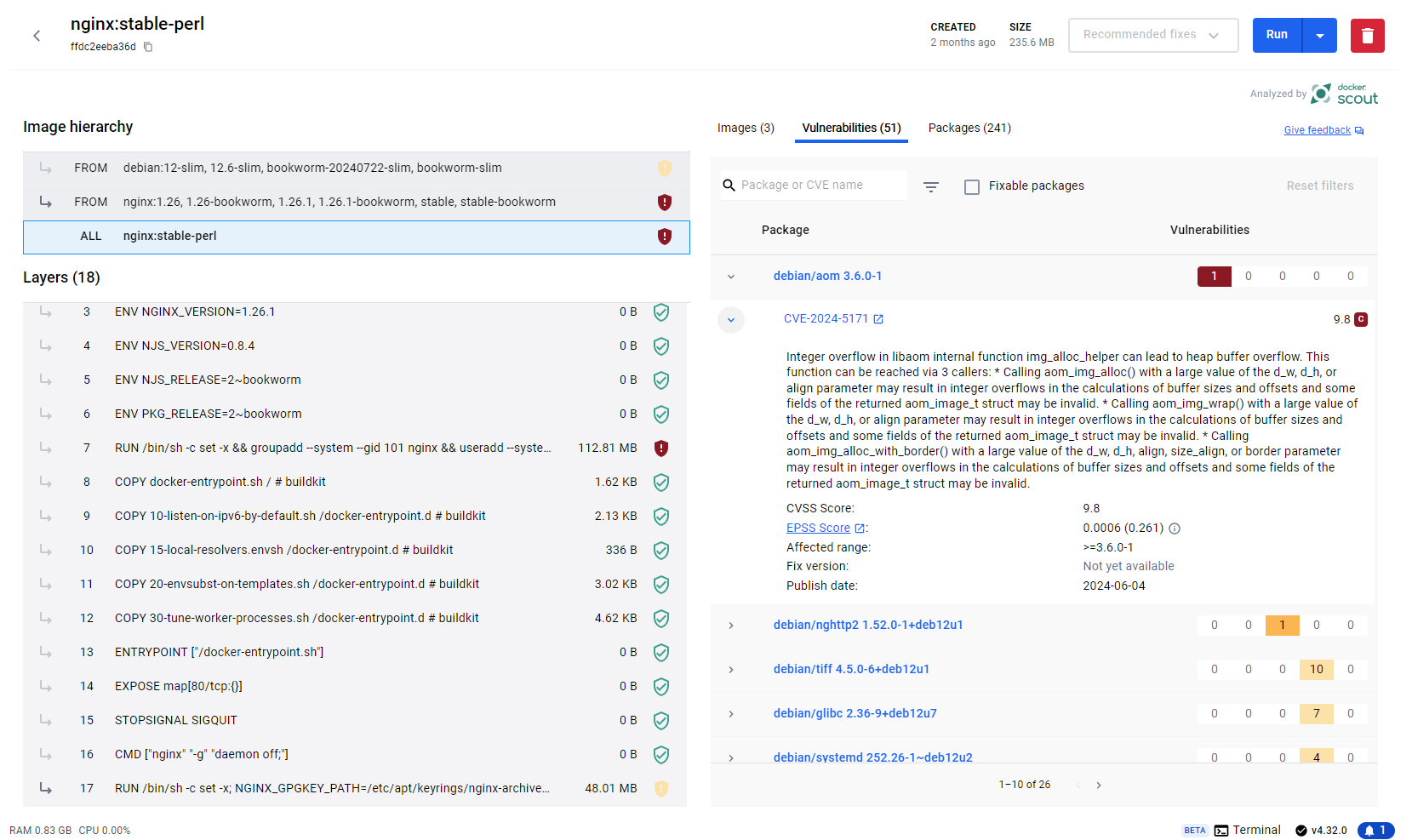

Vulnerability management is now practised almost everywhere, and the Docker Scout tool was developed specifically for finding and patching vulnerabilities. A scan can be started manually from the Inspect tab in the container window, or by selecting the Scout tool on the left side of the panel.

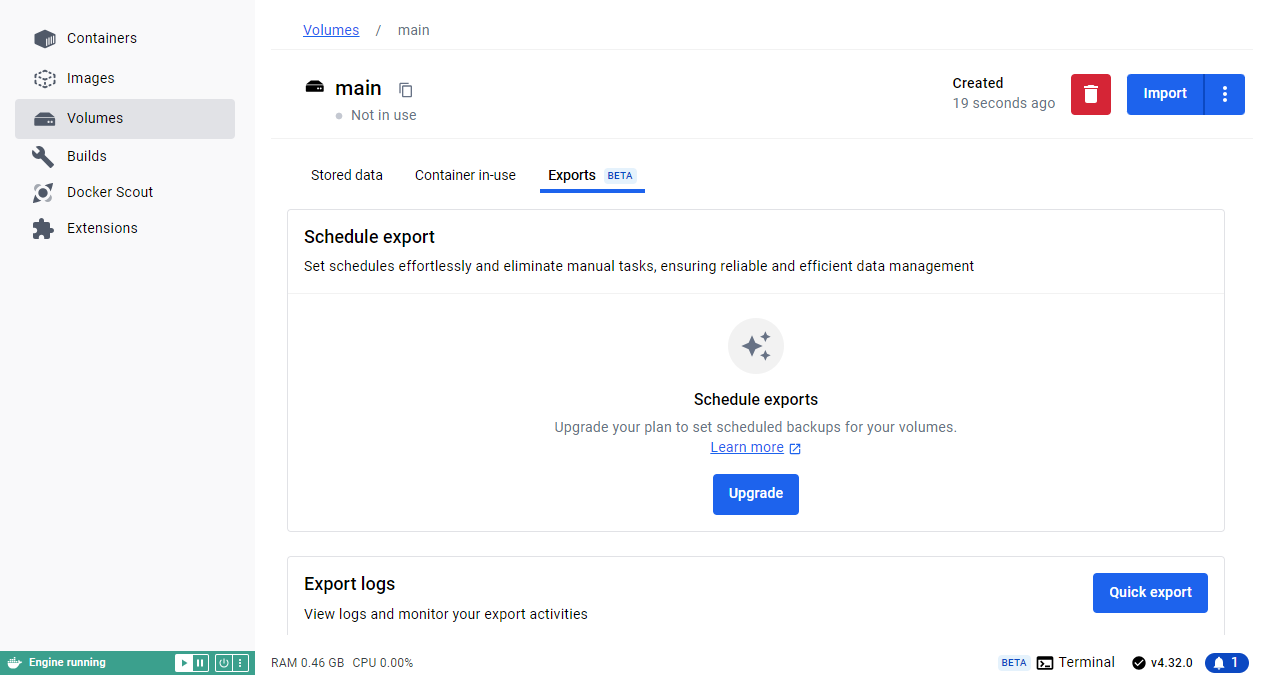
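
Docker Scout is also exposed through the `docker scout` CLI plugin. The sketch below builds two of its subcommands, `cves` and `recommendations`, for an image; the helper name `scout_commands` and the `nginx:latest` reference are assumptions for the example, and the commands are printed rather than run.

```python
import shlex


def scout_commands(image: str) -> list[list[str]]:
    """Build argv lists for two Docker Scout CLI subcommands."""
    return [
        ["docker", "scout", "cves", image],             # list known CVEs
        ["docker", "scout", "recommendations", image],  # suggest image updates
    ]


for argv in scout_commands("nginx:latest"):
    print(shlex.join(argv))
```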

The tool analyses the contents of the container: software executables, configs and the Dockerfile that defines the image layers. Based on the versions of the software it finds, it searches the CVE database and suggests updates for vulnerable packages.

To minimise interaction with the host system, you can create a virtual volume. To do this, go to the Volumes tab and create a volume following the same principle.

In this panel you can import files into a volume, attach containers to it, clone it and create backups. This lets you create a single storage area for many services or, on the contrary, isolated file environments for each container. Also note the Docker Engine control panel at the bottom left, where you can manage the daemon.
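
The shared-storage scenario can be sketched with the CLI as well: create one named volume and mount it into several containers. In the Python sketch below, the volume name `site-data` and the container names `web-1` and `web-2` are hypothetical, and `/usr/share/nginx/html` is assumed as the mount path because it is the default web root of the official nginx image. The commands are only printed.

```python
import shlex


def volume_create_command(volume: str) -> list[str]:
    """Build the argv list for `docker volume create <volume>`."""
    return ["docker", "volume", "create", volume]


def run_with_volume_command(image, name, volume, mount_path, read_only=False):
    """Build a detached `docker run` that mounts a named volume."""
    mount = f"{volume}:{mount_path}" + (":ro" if read_only else "")
    return ["docker", "run", "-d", "--name", name, "-v", mount, image]


web_root = "/usr/share/nginx/html"  # assumed mount path inside the container
commands = [
    volume_create_command("site-data"),
    run_with_volume_command("nginx", "web-1", "site-data", web_root),
    # The second container mounts the same volume read-only.
    run_with_volume_command("nginx", "web-2", "site-data", web_root,
                            read_only=True),
]
for argv in commands:
    print(shlex.join(argv))
```

Giving each container its own volume name instead produces the isolated variant of the same setup.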

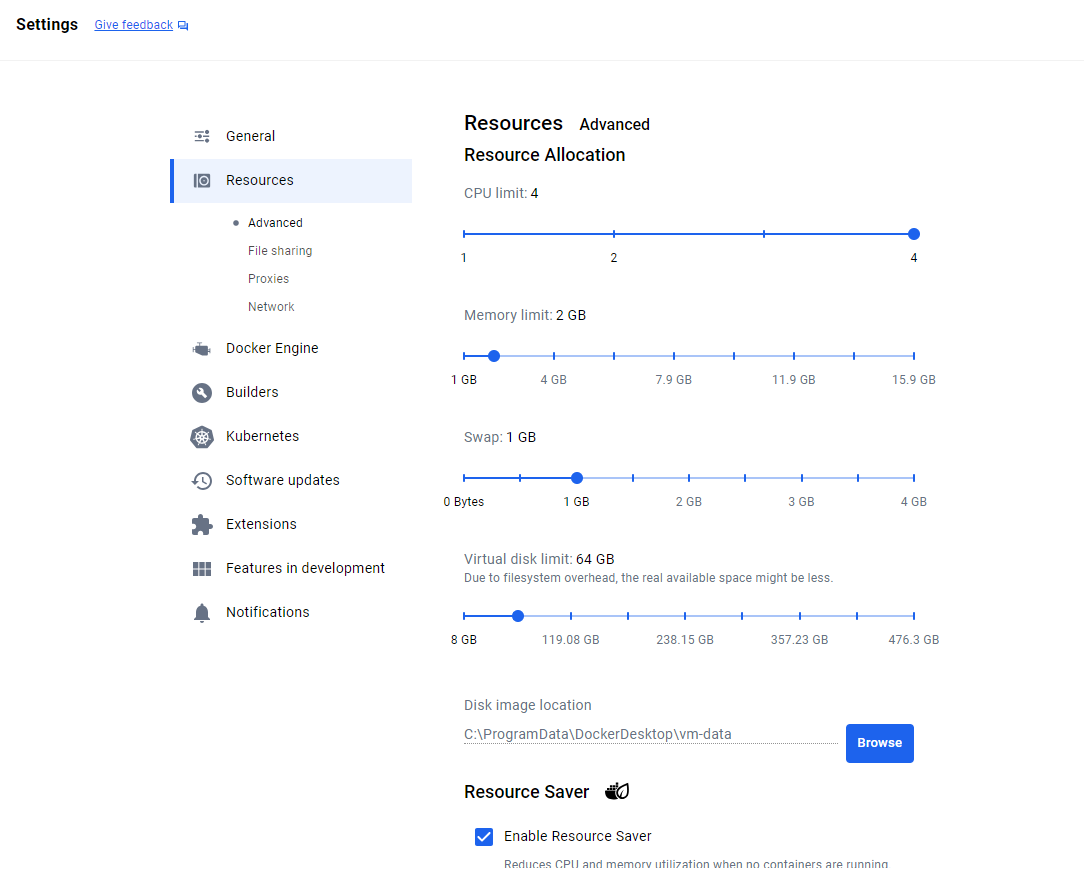

The gear icon at the top of the window opens the settings, where you can configure networking, proxying and resource usage in Docker. If necessary, sliders let you interactively set limits on how much of the host's resources Docker may use. There is also a power-saving feature that reduces CPU load and RAM consumption.

This product lets you quickly build the necessary container infrastructure and delegate tasks to beginners. The Kubernetes and Docker Build items will be discussed in detail in separate knowledge base guides.

Conclusion

Docker Desktop simplifies the process of building and managing containerized applications on Windows. By providing a graphical interface and integrated tools for container, image, volume, and vulnerability management, it reduces the learning curve for new users while maintaining the full power of Docker. With Docker Desktop, you can quickly deploy isolated environments, manage resources efficiently, and secure containers with tools like Docker Scout. Whether for local development or on cloud servers, Docker Desktop makes container management accessible, streamlined, and highly productive.

FAQ

- Q1: What is Docker Desktop?

A: Docker Desktop is a Docker solution for Windows and Mac that provides an out-of-the-box environment to run containers, manage images, volumes, and services via a graphical interface.

- Q2: How do I start a container in Docker Desktop?

A: Go to the Images tab, download or build an image, then go to the Containers tab, click Start, and optionally use the Exec tab to access the container terminal.

- Q3: Can I manage container resources like CPU and RAM?

A: Yes. In the Docker Engine settings, you can configure CPU, RAM, and other resource limits using interactive sliders, and enable power-saving features to optimize usage.

- Q4: How can I secure my containers?

A: Use Docker Scout to scan containers for vulnerabilities. It checks software versions against the CVE database and recommends updates or patches.

- Q5: What are Docker volumes and why use them?

A: Volumes are isolated storage environments for containers. They allow persistent data storage, sharing between containers, and can be cloned or backed up independently from the host OS.

- Q6: Can beginners use Docker Desktop easily?

A: Yes. The graphical interface, control panels, and automated configuration make Docker Desktop accessible for beginners, while advanced users can still use CLI commands and full Docker functionality.

- Q7: Does Docker Desktop work with cloud servers?

A: Absolutely. All Docker Desktop workflows, including container creation, image management, and volumes, can be performed on VPS or VDS cloud servers.